RESEARCH

Adversarial Robustness and Generalization

Quick links: Paper | Pre-Print | Short Paper | CVPR Poster | UDL Poster | HLF Poster | BibTeX | Code

Abstract

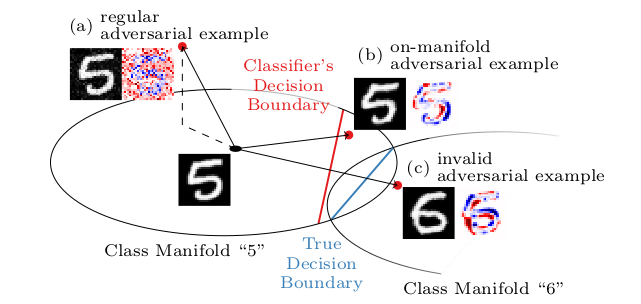

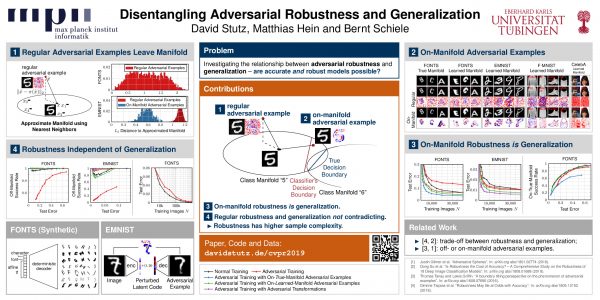

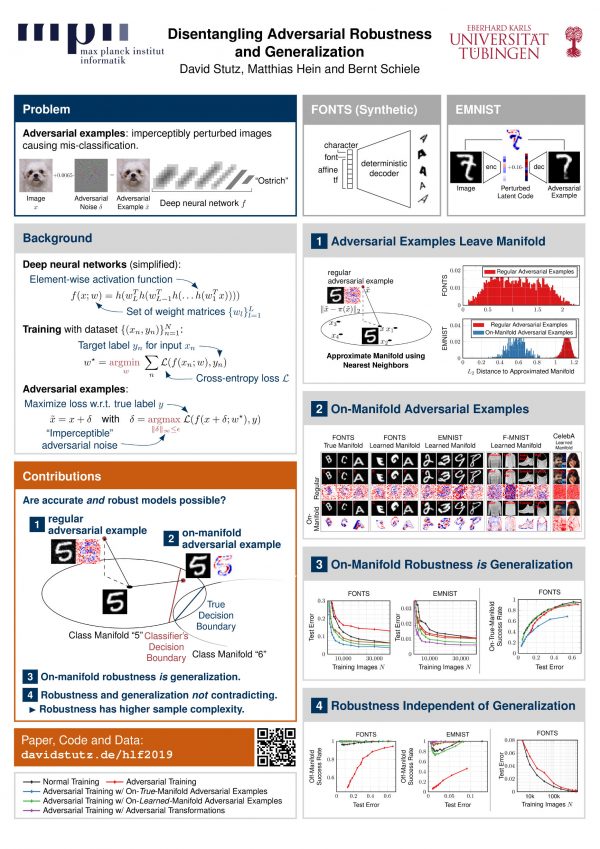

Obtaining deep networks that are robust against adversarial examples and generalize well is an open problem. A recent hypothesis [1][2] even states that both robust and accurate models are impossible, i.e., adversarial robustness and generalization are conflicting goals. In an effort to clarify the relationship between robustness and generalization, we assume an underlying, low-dimensional data manifold and show that: 1. regular adversarial examples leave the manifold; 2. adversarial examples constrained to the manifold, i.e., on-manifold adversarial examples, exist; 3. on-manifold adversarial examples are generalization errors, and on-manifold adversarial training boosts generalization; 4. regular robustness and generalization are not necessarily contradicting goals. These assumptions imply that both robust and accurate models are possible. However, different models (architectures, training strategies etc.) can exhibit different robustness and generalization characteristics. To confirm our claims, we present extensive experiments on synthetic data (with known manifold) as well as on EMNIST [3], Fashion-MNIST [4] and CelebA [5].
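The distinction between regular and on-manifold adversarial examples can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the paper's code: the "known manifold" is a sine curve in 2-D, `decode` stands in for a generative model, the classifier is a fixed linear function, and the attack is a single signed-gradient step rather than whatever attack the experiments use. All points on the curve are assumed to belong to class -1, so a misclassified point on the curve is an ordinary generalization error.

```python
import numpy as np

# Toy "known manifold": a 1-D curve in 2-D input space. decode() is a
# hypothetical stand-in for a learned generative model (e.g. a decoder).
def decode(z):
    return np.array([z, np.sin(z)])

def decode_grad(z):
    # Derivative of decode(z) with respect to the latent code z.
    return np.array([1.0, np.cos(z)])

# Hypothetical linear classifier: predicts +1 if the score is positive, else -1.
W = np.array([1.0, -2.0])

def predict(x):
    return 1 if float(W @ x) > 0 else -1

def regular_attack(x, y, eps):
    # One signed-gradient step in input space, inside an L_inf ball of radius eps.
    # For the loss -y * (W @ x), the gradient with respect to x is -y * W.
    return x + eps * np.sign(-y * W)

def on_manifold_attack(z, y, eta):
    # The same kind of step, taken in latent space. The perturbed code is
    # decoded, so the result stays on the manifold by construction.
    grad_z = -y * float(W @ decode_grad(z))
    return decode(z + eta * np.sign(grad_z))

def distance_to_manifold(x, grid=np.linspace(-3.0, 3.0, 6001)):
    # Brute-force distance to the curve, evaluated on a dense latent grid.
    points = np.stack([grid, np.sin(grid)], axis=1)
    return float(np.min(np.linalg.norm(points - x, axis=1)))

z, y = 0.2, -1  # assumption: every point on the curve has label -1
x = decode(z)
x_regular = regular_attack(x, y, eps=0.3)
x_on_manifold = on_manifold_attack(z, y, eta=0.3)

for name, point in [("clean", x), ("regular", x_regular), ("on-manifold", x_on_manifold)]:
    print(name, "prediction:", predict(point),
          "distance to manifold:", round(distance_to_manifold(point), 3))
```

In this toy setting both perturbed points are misclassified, but only the regular adversarial example moves away from the curve; the on-manifold one is a valid data point that the classifier simply gets wrong.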

Paper

The paper is available from CVF or on arXiv:

    @inproceedings{Stutz2019CVPR,
      author = {David Stutz and Matthias Hein and Bernt Schiele},
      title = {Disentangling Adversarial Robustness and Generalization},
      booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      publisher = {IEEE Computer Society},
      year = {2019}
    }

A short version of the paper was part of the ICML'19 Workshop on Uncertainty and Robustness in Deep Learning:

Short Paper | Posters

Code & Data

The code is available in the following repository:

Code on GitHub

The repository includes installation instructions and a getting started guide for reproducing the experiments presented in the paper. Some components of the code (attacks, utilities, adversarial training etc.) can also be used individually.

The data, in HDF5 format, required for the experiments can be downloaded below:

| Data | Archive |
|---|---|
| FONTS | cvpr2019_adversarial_robustness_fonts.tar.gz |
| EMNIST | cvpr2019_adversarial_robustness_emnist.tar.gz |
| Fashion-MNIST | cvpr2019_adversarial_robustness_fashion.tar.gz |
| Pre-Trained VAE-GANs | cvpr2019_adversarial_robustness_manifolds.tar.gz |

Due to the license of CelebA, the dataset cannot be offered for download in the required HDF5 format. Code for converting the dataset is included in the repository.
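As a rough sketch of how one of the downloaded archives might be unpacked before use, the snippet below relies only on the Python standard library. The helper name `extract_archive` and the target directory are hypothetical, and the internal layout of the archives is not described here, so the function simply returns whatever regular files it finds; the repository's getting started guide remains the reference for where the data is expected to live.

```python
import tarfile
from pathlib import Path

def extract_archive(archive_path, target_dir):
    """Extract a .tar.gz archive and return the paths of the extracted regular files."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        # Use the safer "data" extraction filter where the running Python supports it.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(target, **kwargs)
        names = [member.name for member in tar.getmembers() if member.isfile()]
    return sorted(target / name for name in names)

# Example usage (the target directory "data/fonts" is an assumption):
# files = extract_archive("cvpr2019_adversarial_robustness_fonts.tar.gz", "data/fonts")
# for path in files:
#     print(path)
```

The extracted HDF5 files can then be opened with any HDF5 reader; which datasets they contain is best checked by inspecting the files or the repository documentation.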

News & Updates

Apr 7, 2019. Code and data are available on GitHub: davidstutz/cvpr2019-adversarial-robustness.

Apr 5, 2019. A short version of the paper will be presented at the ICML'19 Workshop on Uncertainty and Robustness in Deep Learning.

Mar 1, 2019. The paper was accepted at CVPR'19.

References

- [1] D. Su, H. Zhang, H. Chen, J. Yi, P.-Y. Chen, and Y. Gao. Is robustness the cost of accuracy? – a comprehensive study on the robustness of 18 deep image classification models. arXiv.org, abs/1808.01688, 2018.

- [2] D. Tsipras, S. Santurkar, L. Engstrom, A. Turner, and A. Madry. Robustness may be at odds with accuracy. arXiv.org, abs/1805.12152, 2018.

- [3] G. Cohen, S. Afshar, J. Tapson, and A. van Schaik. EMNIST: an extension of MNIST to handwritten letters. arXiv.org, abs/1702.05373, 2017.

- [4] H. Xiao, K. Rasul, and R. Vollgraf. Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. arXiv.org, abs/1708.07747, 2017.

- [5] Z. Liu, P. Luo, X. Wang, and X. Tang. Deep learning face attributes in the wild. In ICCV, 2015.