
Improved 3D Shape Completion

Quick links: Paper | Pre-Print | 3-Page Summary | MLSS Poster | BibTeX | Code and Data

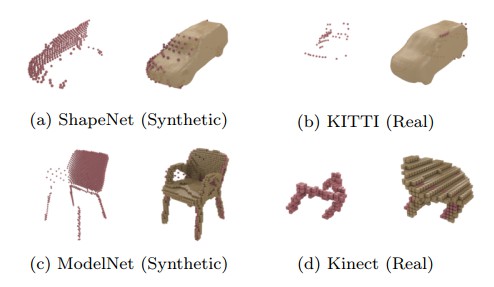

This page presents follow-up work to our CVPR'18 paper: we improved the proposed weakly-supervised 3D shape completion approach, referred to as amortized maximum likelihood (AML), and created two high-quality, challenging, synthetic benchmarks based on ShapeNet [] and ModelNet []. We also present extensive experiments on real data from KITTI [] and Kinect []. The pre-print, a more detailed abstract, and source code and data are available below.

This work is based on our earlier CVPR'18 paper, but significantly improves results by utilizing an improved model and more detailed benchmarks. See below for a detailed description of the improvements over our CVPR'18 paper.

Abstract

We address the problem of 3D shape completion from sparse and noisy point clouds, a fundamental problem in computer vision and robotics. Recent approaches are either data-driven or learning-based: Data-driven approaches rely on a shape model whose parameters are optimized to fit the observations; Learning-based approaches, in contrast, avoid the expensive optimization step by learning to directly predict complete shapes from incomplete observations in a fully-supervised setting. However, full supervision is often not available in practice. In this work, we propose a weakly-supervised learning-based approach to 3D shape completion which neither requires slow optimization nor direct supervision. While we also learn a shape prior on synthetic data, we amortize, i.e., learn, maximum likelihood fitting using deep neural networks resulting in efficient shape completion without sacrificing accuracy. On synthetic benchmarks based on ShapeNet [] and ModelNet [] as well as on real robotics data from KITTI [] and Kinect [], we demonstrate that the proposed amortized maximum likelihood approach is able to compete with the fully supervised baseline of [] and outperforms the data-driven approach of [], while requiring less supervision and being significantly faster.

Differences to our CVPR'18 Work

This ArXiv pre-print is a significant extension of our previous work accepted to CVPR'18 [] with the following contributions:

- We implemented a completely new pipeline for synthetic data generation to address shortcomings with respect to the visual quality of the predicted shapes. In particular, we used volumetric fusion to obtain detailed, watertight meshes, manually selected $220$ models from ShapeNet [] or ModelNet [] and computed both occupancy grids and signed distance functions (SDFs) as well as synthetic observations for experiments.

- Additionally, we extended the proposed, weakly-supervised shape completion approach in order to enforce more variety and increase visual quality in the predicted, completed shapes.

- We present extensive experiments on ModelNet [] considering both category-specific and category-agnostic experiments showing that our approach generalizes across object categories and the level of supervision can be reduced even further.

- We also present experiments on Kinect [], a real-world dataset of chairs and tables, showing that our approach is able to generalize from as few as $30$ training samples.

- We increased the spatial resolution used for occupancy grids and SDFs to up to $64^3$ on ModelNet [] and Kinect [] and $48 \times 108 \times 48$ on ShapeNet [] and KITTI [] to obtain more detailed shape predictions.

- We compare the proposed method with the work by Dai et al. [] as representative of a supervised, learning-based approach as well as an iterative closest point (ICP) baseline based on [].

Download & Citing

The paper is available as pre-print on ArXiv and on Springer Link:

Paper on Springer Link | Paper on ArXiv

    @article{Stutz2018IJCV,
      author = {David Stutz and Andreas Geiger},
      title = {Learning 3D Shape Completion under Weak Supervision},
      journal = {International Journal of Computer Vision},
      year = {2018},
    }

3-Page Summary

Code, Data and Models

The code and data are bundled in the following repository:

Code on GitHub

The repository includes the following sub-repositories:

| Repository | Description |
|---|---|
| aml-shape-completion | Shape completion implementations: amortized maximum likelihood (AML) (including the VAE shape prior), maximum likelihood (ML), Engelmann et al. [], Dai et al. [], iterative closest point (ICP) and the supervised baseline (Sup). Implementations are mostly in Torch and C++ (for []). Installation requirements and usage instructions are included. Note that the AML version in this repository obtains improved results over our CVPR'18 version at davidstutz/daml-shape-completion. |
| mesh-evaluation | Efficient C++ implementation of mesh-to-mesh distance (accuracy and completeness) as well as mesh-to-point distance; this tool can be used for evaluation. |
| bpy-visualization-utils | Python and Blender tools for visualization of meshes, occupancy grids and point clouds. These tools have been used for visualizations as presented in the paper. |
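To make the two evaluation measures named for mesh-evaluation more concrete, here is a minimal NumPy sketch that works on point samples rather than meshes. It assumes the common convention that accuracy is the mean distance from the prediction to the reference and completeness is the mean distance in the opposite direction; the function names are hypothetical and the brute-force nearest-neighbor search is not how the C++ tool computes mesh-to-mesh distances.

```python
import numpy as np


def mean_nearest_distance(source, target):
    """Mean Euclidean distance from each source point to its nearest target point."""
    # Pairwise differences via broadcasting: shape (n_source, n_target, 3).
    diff = source[:, None, :] - target[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    return dist.min(axis=1).mean()


def accuracy_and_completeness(predicted, reference):
    """Assumed convention: accuracy is prediction-to-reference,
    completeness is reference-to-prediction."""
    accuracy = mean_nearest_distance(predicted, reference)
    completeness = mean_nearest_distance(reference, predicted)
    return accuracy, completeness


# Toy example: the prediction covers only one of two reference points.
predicted = np.array([[0.0, 0.0, 0.0]])
reference = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
accuracy, completeness = accuracy_and_completeness(predicted, reference)
```

In the toy example the prediction lies exactly on the reference, so it is accurate, but it misses half of the reference, which only the completeness term penalizes.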

The above repositories contain the very essentials for reproducing the results reported in the paper. There are, however, some additional repositories containing related tools:

| Repository | Description |
|---|---|
| mesh-voxelization | Efficient C++ implementation for voxelizing watertight triangular meshes into occupancy grids and/or signed distance functions (SDFs). This tool was used to create the shape completion benchmarks as described below. |
| mesh-fusion | This is a Python implementation of TSDF Fusion using and ; this approach was used to obtain simplified and watertight meshes for our synthetic benchmarks. |
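As a small illustration of the two volumetric representations mentioned for mesh-voxelization, the sketch below samples the signed distance function of an analytic sphere at voxel centers and derives an occupancy grid from it. The sphere, the unit-cube grid layout and the function name `sphere_sdf_grid` are assumptions chosen for this example; the actual tool operates on watertight triangular meshes.

```python
import numpy as np


def sphere_sdf_grid(resolution, radius):
    """Signed distance to a sphere centered in the unit cube, sampled at voxel centers.

    Values are negative inside the sphere and positive outside.
    """
    axis = (np.arange(resolution) + 0.5) / resolution  # voxel centers in (0, 1)
    x, y, z = np.meshgrid(axis, axis, axis, indexing="ij")
    distance_to_center = np.sqrt((x - 0.5) ** 2 + (y - 0.5) ** 2 + (z - 0.5) ** 2)
    return distance_to_center - radius


sdf = sphere_sdf_grid(32, 0.3)
# An occupancy grid marks every voxel whose center lies inside or on the surface.
occupancy = sdf <= 0
```

The SDF keeps the distance to the surface at every voxel, which is what allows sub-voxel accurate surfaces to be extracted, whereas the occupancy grid only records inside versus outside.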

Data downloads can also be found in the main repository; details are given below:

Except for ModelNet10 and Kinect, all downloads include benchmarks for three different resolutions. On ShapeNet and KITTI, these are $24\times 54\times24$, $32\times72\times32$ and $48\times108\times48$. On ModelNet, these include $32^3$, $48^3$ and $64^3$.

| Download | Description |
|---|---|
| ShapeNet: SN-clean: Amazon AWS MPI-INF; SN-noisy: Amazon AWS MPI-INF | The "clean" and "noisy" versions of our ShapeNet benchmark, i.e., with observations synthetically generated without or with noise; both can be used to benchmark shape completion methods. Note that this is not the same data as for our CVPR'18 paper. |
| KITTI: Amazon AWS MPI-INF | Our benchmark derived from KITTI; it uses the ground truth 3D bounding boxes to extract observations from the LiDAR point clouds. It does not include ground truth shapes; however, we tried to generate an alternative by considering the same bounding boxes in different timesteps. Note that this is not the same data as for our CVPR'18 paper. |
| ModelNet: bathtubs: Amazon AWS MPI-INF; chairs: Amazon AWS MPI-INF; desks: Amazon AWS MPI-INF; tables: Amazon AWS MPI-INF | Single-category benchmarks derived from ModelNet's bathtubs, chairs, desks and tables. |
| ModelNet10: Amazon AWS MPI-INF | Benchmark based on all ten categories from ModelNet10. |
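The KITTI benchmark extracts observations from LiDAR points that fall into ground truth 3D bounding boxes. The sketch below shows one simple way such a step could look: points inside a box are kept and binned into an occupancy grid at one of the listed resolutions. It assumes an axis-aligned box for brevity (KITTI boxes are oriented, so points would first need to be transformed into the box frame), and the function name and argument layout are hypothetical rather than taken from the released code.

```python
import numpy as np


def voxelize_box(points, box_min, box_max, resolution):
    """Bin the points that fall inside an axis-aligned box into a boolean grid."""
    points = np.asarray(points, dtype=float)
    box_min = np.asarray(box_min, dtype=float)
    box_max = np.asarray(box_max, dtype=float)
    resolution = np.asarray(resolution, dtype=int)

    # Keep only the points inside the half-open box [box_min, box_max).
    inside = np.all((points >= box_min) & (points < box_max), axis=1)
    # Map the remaining points to [0, 1) along each axis, then to voxel indices.
    scaled = (points[inside] - box_min) / (box_max - box_min)
    indices = np.floor(scaled * resolution).astype(int)
    # Guard against floating point round-off at the upper box faces.
    indices = np.minimum(indices, resolution - 1)

    grid = np.zeros(tuple(resolution), dtype=bool)
    grid[indices[:, 0], indices[:, 1], indices[:, 2]] = True
    return grid


# Toy example: three points, one of which lies outside the box.
points = [[0.1, 0.1, 0.1], [5.0, 5.0, 5.0], [0.9, 1.9, 0.9]]
grid = voxelize_box(points, [0.0, 0.0, 0.0], [1.0, 2.0, 1.0], (24, 54, 24))
```

The resulting grid is sparse: only voxels that contain at least one LiDAR point are marked, which mirrors the sparsity of the observations discussed on this page.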

We also provide pre-trained models for the proposed approach and all baselines; the downloads include models on all datasets and for all resolutions.

| Download | Description |
|---|---|
| AML Models (∼ 2.8GB): Amazon AWS MPI-INF | Pre-trained Torch models for the proposed amortized maximum likelihood (AML) approach. |
| Dai et al. [] Models (∼ 11.6GB): Amazon AWS MPI-INF | Pre-trained Torch models for the fully-supervised baseline of Dai et al. []. |
| Supervised Baseline Models (∼ 1.6GB): Amazon AWS MPI-INF | Pre-trained Torch models of our own fully-supervised baseline. |
| DVAE Shape Prior Models (∼ 2.3GB): Amazon AWS MPI-INF | Pre-trained Torch models of our DVAE shape prior. |

News & Updates

Nov 30, 2018. Data and models are now also available through a server provided by MPI-INF.

Oct 8, 2018. The paper has been accepted at IJCV: link.springer.com/article/10.1007/s11263-018-1126-y.

May 18, 2018. The pre-print is available on ArXiv.

June 7, 2018. Code, data and models now available on GitHub.

Method

Figure 1: Overview of the proposed, weakly-supervised 3D shape completion approach; see below or paper for details.

We propose an amortized maximum likelihood approach for 3D shape completion, see Figure 1, avoiding slow optimization as required by data-driven approaches and the required supervision of learning-based approaches. Specifically, we first learn a shape prior on synthetic shapes using a (denoising) variational auto-encoder. Subsequently, 3D shape completion can be formulated as a maximum likelihood problem. However, instead of maximizing the likelihood independently for distinct observations, we follow the idea of amortized inference and learn to predict the maximum likelihood solutions directly. Towards this goal, we train a new encoder which embeds the observations in the same latent space using an unsupervised maximum likelihood loss. This allows us to learn 3D shape completion in challenging real-world situations, e.g., on KITTI, and obtain sub-voxel accurate results using signed distance functions at resolutions up to $64^3$ voxels.
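As a rough illustration of the unsupervised maximum likelihood loss described above, the following NumPy sketch scores a predicted occupancy grid only on voxels that were actually observed and adds a Gaussian prior term on the latent code. The function name `aml_loss`, the split into occupied and free-space masks, and the `prior_weight` parameter are assumptions made for this example; the released Torch implementation may differ in its details.

```python
import numpy as np


def sigmoid(a):
    """Numerically stable logistic function."""
    return 0.5 * (1.0 + np.tanh(0.5 * a))


def aml_loss(logits, observed_occupied, observed_free, z, prior_weight=1.0):
    """Negative log-likelihood of the observations plus a unit Gaussian prior on z.

    logits:            decoder output (pre-sigmoid occupancy) for one shape
    observed_occupied: boolean mask of voxels containing an observed point
    observed_free:     boolean mask of voxels known to be free space
    z:                 latent code predicted by the new encoder
    """
    p = np.clip(sigmoid(logits), 1e-7, 1.0 - 1e-7)
    # Only observed voxels contribute; unobserved voxels are left to the shape prior.
    nll = -np.log(p[observed_occupied]).sum() - np.log(1.0 - p[observed_free]).sum()
    # Negative log-density of a unit Gaussian on the code, up to a constant.
    prior = 0.5 * np.sum(z ** 2)
    return nll + prior_weight * prior


# Toy example with three voxels: one occupied, one free, one unobserved.
logits = np.array([10.0, -10.0, 0.0])
occupied = np.array([True, False, False])
free = np.array([False, True, False])
loss_consistent = aml_loss(logits, occupied, free, np.zeros(2))
loss_inconsistent = aml_loss(-logits, occupied, free, np.zeros(2))
```

In training, `logits` would come from the fixed decoder applied to the code `z` predicted by the new encoder, so that minimizing this loss updates only the encoder and no ground truth shape is needed.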

For experimental evaluation, we introduce two novel, synthetic shape completion benchmarks based on ShapeNet [] and ModelNet []. We compare our approach to the data-driven approach by Engelmann et al. [], a baseline inspired by Gupta et al. (2015) and the fully-supervised learning-based approach by Dai et al. []; we additionally present experiments on real data from KITTI and Kinect []. Experiments show that our approach outperforms data-driven techniques and rivals learning-based techniques while significantly reducing inference time and using only a fraction of supervision.

Experiments

In the paper, we discuss various experiments on the four constructed benchmark datasets, i.e., ShapeNet, ModelNet, KITTI and Kinect. We consider the single-category case, the multi-category case as well as multiple resolutions. On KITTI and Kinect, we demonstrate that our approach is able to learn on data without ground truth and visually outperform related work. We also present insights regarding the learned latent space (of the shape prior) and the embedding learned during the inference step (see Figure 1).

Figure 2: Qualitative results of the proposed AML approach in comparison to related work by Engelmann et al. (Eng16) and Dai et al. (Dai17) on ShapeNet and ModelNet.

For example, Figure 2 presents experiments on ShapeNet and ModelNet, considering one object category at a time at low resolution (specifically, $24 \times 54 \times 24$ and $32^3$, respectively). As can be seen, our approach outperforms the data-driven approach [] (referred to as Eng16) and is able to compete with [] (indicated as Dai17). This is remarkable, as we use up to $96\%$ less supervision compared to Dai17.

Figure 3: Multi-category results on ModelNet (left) and results on KITTI (right). For ModelNet, we used a resolution of $32^3$; on KITTI we use $32 \times 72 \times 32$ and additionally show partial ground truth in green.

In Figure 3, we also show some multi-category results (left) as well as results on KITTI (right); note that on KITTI, there is only partial ground truth available. The supervised baseline of Dai et al. [] (Dai17) was trained on ShapeNet, where we put significant effort into modeling KITTI's sensor and noise statistics. The experiments show that our approach performs well under even weaker supervision, i.e., when considering multiple object categories, and is able to learn on real data from KITTI, despite the noise and sparsity.

Conclusion

We presented a novel, weakly-supervised learning-based approach to 3D shape completion from sparse and noisy point cloud observations. We used a (denoising) variational auto-encoder to learn a latent space of shapes for one or multiple object categories using synthetic data. Based on the learned generative model, we formulated 3D shape completion as a maximum likelihood problem. In a second step, we then fixed the learned generative model and trained a new recognition model to amortize the maximum likelihood problem. Compared to related data-driven approaches, our approach offers fast inference at test time; in contrast to other learning-based approaches, we do not require full supervision during training. On two newly created synthetic shape completion benchmarks, derived from ShapeNet's cars and ModelNet10, as well as on real data from KITTI and Kinect, we demonstrated that AML outperforms related data-driven approaches while being significantly faster. We further showed that AML is able to compete with fully-supervised approaches, both quantitatively and qualitatively, while using only $3$ to $10\%$ supervision or less. We additionally showed that AML is able to generalize across object categories without category supervision during training.

References

- [] Stutz D, Geiger A (2018) Learning 3D Shape Completion from Laser Scan Data with Weak Supervision. In: Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR).

- [] Dai A, Qi CR, Nießner M (2017) Shape completion using 3D-encoder-predictor CNNs and shape synthesis. In: Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR).

- [] Engelmann F, Stückler J, Leibe B (2016) Joint object pose estimation and shape reconstruction in urban street scenes using 3D shape priors. In: Proc. of the German Conference on Pattern Recognition (GCPR).

- [] Gupta S, Arbeláez PA, Girshick RB, Malik J (2015) Aligning 3D models to RGB-D images of cluttered scenes. In: Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR).

- [] Wu Z, Song S, Khosla A, Yu F, Zhang L, Tang X, Xiao J (2015) 3D ShapeNets: A deep representation for volumetric shapes. In: Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR).

- [] Chang AX, Funkhouser TA, Guibas LJ, Hanrahan P, Huang Q, Li Z, Savarese S, Savva M, Song S, Su H, Xiao J, Yi L, Yu F (2015) ShapeNet: An information-rich 3D model repository. arXiv.org 1512.03012.

- [] Geiger A, Lenz P, Urtasun R (2012) Are we ready for autonomous driving? The KITTI vision benchmark suite. In: Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR).

- [] Yang B, Rosa S, Markham A, Trigoni N, Wen H (2018) 3D object dense reconstruction from a single depth view. arXiv.org abs/1802.00411.