A short version of this paper was accepted at UDL 2020.

The project page, including code and pre-trained models, can be found here.

Abstract

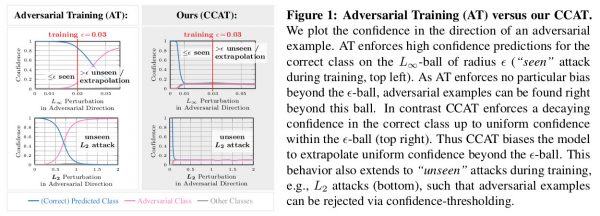

Adversarial training yields models that are robust against a specific threat model, e.g., $L_\infty$ adversarial examples. Typically, this robustness does not generalize to previously unseen threat models, e.g., other $L_p$ norms or larger perturbations. Our confidence-calibrated adversarial training (CCAT) tackles this problem by biasing the model towards low-confidence predictions on adversarial examples. Because examples with low confidence can then be rejected, robustness generalizes beyond the threat model employed during training. CCAT, trained only on $L_\infty$ adversarial examples, increases robustness against larger $L_\infty$, $L_2$, $L_1$ and $L_0$ attacks, adversarial frames, distal adversarial examples and corrupted examples, and yields better clean accuracy compared to adversarial training. For a thorough evaluation, we developed novel white- and black-box attacks that directly attack CCAT by maximizing confidence. For each threat model, we use $7$ attacks with up to $50$ restarts and $5000$ iterations and report the worst-case robust test error, extended to our confidence-thresholded setting, across all attacks.
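The rejection step mentioned in the abstract can be illustrated with a minimal sketch. This is not the evaluation code from the repository: the function names `softmax` and `thresholded_error` and the threshold `tau` are hypothetical, and the metric shown (error among accepted examples) is a simplified stand-in for the confidence-thresholded robust test error defined in the paper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def thresholded_error(logits_batch, labels, tau):
    """Error among examples whose top confidence is at least tau.

    Hypothetical helper for illustration only. Examples with confidence
    below tau are rejected and do not count towards the error.
    Returns (error on accepted examples, number of rejected examples).
    """
    accepted = 0
    wrong = 0
    for logits, label in zip(logits_batch, labels):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence < tau:
            continue  # rejected: the model abstains on this example
        accepted += 1
        if probs.index(confidence) != label:
            wrong += 1
    rejected = len(labels) - accepted
    error = wrong / accepted if accepted else 0.0
    return error, rejected


# Toy inputs (made up): one confident correct prediction, one
# near-uniform prediction, and one confident wrong prediction.
logits_batch = [[5.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 6.0, 0.0]]
labels = [0, 1, 2]
error, rejected = thresholded_error(logits_batch, labels, tau=0.9)
```

In this toy setting, the near-uniform prediction is rejected, so only the two confident predictions contribute to the error. A model that is trained to produce near-uniform outputs on adversarial examples would therefore have those examples filtered out by the threshold rather than counted as confident mistakes.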

Revised Paper on ArXiv

@article{Stutz2020ICML,
  author = {David Stutz and Matthias Hein and Bernt Schiele},
  title = {Confidence-Calibrated Adversarial Training: Generalizing to Unseen Attacks},
  journal = {Proceedings of the International Conference on Machine Learning {ICML}},
  year = {2020}
}

Code and Pre-Trained Models

The code for training and evaluation (attacks and metrics) including pre-trained models is available on GitHub:

Code on GitHub

The repository includes installation instructions and documentation.