RESEARCH

Fragile Features, Batch Normalization and Adversarial Training

This is work led by Nils Walter.

Abstract

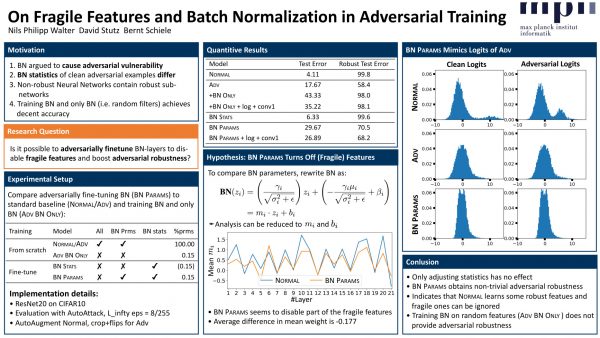

Modern deep learning architectures utilize batch normalization (BN) to stabilize training and improve accuracy. It has been shown that the BN layers alone are surprisingly expressive. In the context of robustness against adversarial examples, however, BN is argued to increase vulnerability. That is, BN helps to learn fragile features. Nevertheless, BN is still used in adversarial training, which is the de facto standard for learning robust features. To shed light on the role of BN in adversarial training, we investigate to what extent the expressiveness of BN can be used to robustify fragile features in comparison to random features. On CIFAR10, we find that adversarially fine-tuning just the BN layers can result in non-trivial adversarial robustness. Adversarially training only the BN layers from scratch, in contrast, does not yield meaningful adversarial robustness. Our results indicate that fragile features can be used to learn models with moderate adversarial robustness, while random features cannot.
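To make the idea of adversarially fine-tuning only the BN parameters concrete, here is a minimal toy sketch in plain Python. It is not the paper's implementation. Everything in it is an assumption made for illustration: the 2D toy data, the frozen random features `W` and readout `v`, the fixed normalization statistics `mu` and `sigma`, the hypothetical helper names, and single-step FGSM standing in for whichever attack the paper uses. The point it shows is that only the BN scale `gamma` and shift `beta` receive gradient updates.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def predict(x, W, v, mu, sigma, gamma, beta):
    """Frozen linear features -> BN-style normalize/scale/shift -> frozen readout."""
    z_hat = [(sum(w * xi for w, xi in zip(row, x)) - m) / s
             for row, m, s in zip(W, mu, sigma)]
    logit = sum(vj * (g * z + b) for vj, g, z, b in zip(v, gamma, z_hat, beta))
    return sigmoid(logit), z_hat

def fgsm(x, y, eps, W, v, mu, sigma, gamma, beta):
    """One signed-gradient step on the input (L-infinity budget eps)."""
    p, _ = predict(x, W, v, mu, sigma, gamma, beta)
    grad = [(p - y) * sum(v[j] * gamma[j] * W[j][i] / sigma[j] for j in range(len(W)))
            for i in range(len(x))]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def finetune_bn_only(data, W, v, mu, sigma, gamma, beta, eps=0.1, lr=0.1, epochs=20):
    """Adversarial training where only gamma and beta are updated."""
    gamma, beta = list(gamma), list(beta)
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(x, y, eps, W, v, mu, sigma, gamma, beta)
            p, z_hat = predict(x_adv, W, v, mu, sigma, gamma, beta)
            for j in range(len(gamma)):
                # Gradients of the logistic loss w.r.t. the BN parameters only.
                gamma[j] -= lr * (p - y) * v[j] * z_hat[j]
                beta[j] -= lr * (p - y) * v[j]
    return gamma, beta

# Toy setup (all values are illustrative assumptions).
random.seed(0)
data = [([random.gauss(c, 1.0), random.gauss(c, 1.0)], y)
        for y, c in ((0, -1.0), (1, 1.0)) for _ in range(50)]
W = [[random.gauss(0, 1) for _ in range(2)] for _ in range(4)]  # frozen features
v = [random.gauss(0, 1) for _ in range(4)]                      # frozen readout

# Fixed normalization statistics estimated once on clean data.
feats = [[sum(w * xi for w, xi in zip(row, x)) for row in W] for x, _ in data]
mu = [sum(f[j] for f in feats) / len(feats) for j in range(4)]
sigma = [math.sqrt(sum((f[j] - mu[j]) ** 2 for f in feats) / len(feats) + 1e-5)
         for j in range(4)]

gamma, beta = finetune_bn_only(data, W, v, mu, sigma, [1.0] * 4, [0.0] * 4)
```

In a real network the same pattern would amount to freezing every parameter except the BN scales and shifts before running adversarial training; whether the frozen weights come from a pre-trained model or from random initialization is the comparison the abstract describes.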

Paper

The paper is available on arXiv:

@article{Walter2022ARXIV,
  author = {Walter, Nils Philipp and Stutz, David and Schiele, Bernt},
  title = {On Fragile Features and Batch Normalization in Adversarial Training},
  journal = {CoRR},
  volume = {abs/2204.12393},
  year = {2022}
}

Poster

Poster as PDF

News & Updates

May 29, 2022. The paper was accepted at the Art of Robustness Workshop at ICML 2022.

April 26, 2022. The paper is available on arXiv.