
Random and Adversarial Bit Error Robustness

Quick links: Paper | Paper on IEEExplore

Abstract

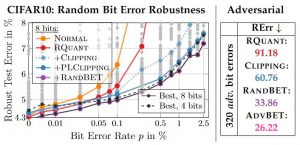

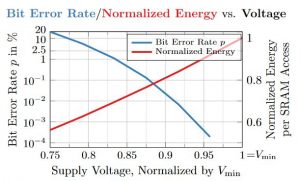

Figure 1: Left: Average bit error rate $p$ (blue, left y-axis) measured on 32 SRAM arrays of size $512\times 64$ and energy (red, right y-axis) vs. voltage (x-axis). Reducing voltage reduces energy consumption but introduces exponentially increasing rates of bit errors. Middle: Robust test error RErr after injecting random bit errors, plotted against bit error rate. Our robust quantization (red), combined with (per-layer) weight clipping (blue) and random bit error training (violet), improves robustness considerably. Right: RErr against up to $320$ adversarial bit errors, with adversarial bit error training reducing RErr from above $90\%$ to $26.22\%$.

Deep neural network (DNN) accelerators have received considerable attention in recent years due to their potential to save energy compared to mainstream hardware. Low-voltage operation of DNN accelerators reduces energy consumption significantly further, but it causes bit-level failures in the memory storing the quantized DNN weights. Furthermore, DNN accelerators have been shown to be vulnerable to adversarial attacks on voltage controllers or individual bits. In this paper, we show that a combination of robust fixed-point quantization, weight clipping, and either random bit error training (RandBET) or adversarial bit error training (AdvBET) significantly improves robustness against random or adversarial bit errors in quantized DNN weights. This not only leads to high energy savings for low-voltage operation and low-precision quantization, but also improves the security of DNN accelerators. Our approach generalizes across operating voltages and accelerators, as demonstrated on bit errors from profiled SRAM arrays, and achieves robustness against both targeted and untargeted bit-level attacks. Without losing more than 0.8%/2% in test accuracy, we can reduce energy consumption on CIFAR10 by 20%/30% for 8/4-bit quantization using RandBET. Allowing up to 320 adversarial bit errors, AdvBET reduces test error from above 90% (chance level) to 26.22% on CIFAR10.
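The random bit error model described in the abstract can be illustrated with a short NumPy sketch. This is not the paper's implementation: the simple symmetric fixed-point scheme and the helper names `quantize`, `dequantize`, and `inject_random_bit_errors` are assumptions made for this example, and the robust quantization proposed in the paper may differ. The sketch quantizes weights to unsigned integer codes and flips every stored bit independently with probability $p$.

```python
import numpy as np

def quantize(weights, bits=8, w_max=None):
    """Symmetric fixed-point quantization (illustrative assumption, bits <= 8)."""
    w_max = float(np.max(np.abs(weights))) if w_max is None else w_max
    levels = 2 ** (bits - 1) - 1
    q = np.round(np.clip(weights, -w_max, w_max) / w_max * levels).astype(np.int64)
    # Shift to unsigned codes in [0, 2 * levels] so they can be stored bit-wise.
    return (q + levels).astype(np.uint8), w_max

def dequantize(codes, w_max, bits=8):
    """Map unsigned codes back to floating-point weights."""
    levels = 2 ** (bits - 1) - 1
    return (codes.astype(np.float64) - levels) / levels * w_max

def inject_random_bit_errors(codes, p, bits=8, rng=None):
    """Flip each of the `bits` stored bits independently with probability p."""
    rng = np.random.default_rng() if rng is None else rng
    flips = rng.random(codes.shape + (bits,)) < p
    # Combine the per-bit flip decisions into one XOR mask per weight.
    masks = (flips * (1 << np.arange(bits))).sum(axis=-1).astype(codes.dtype)
    return codes ^ masks

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.1, size=1000)
codes, w_max = quantize(weights)
noisy = dequantize(inject_random_bit_errors(codes, p=0.01, rng=rng), w_max)
print("mean absolute weight change:", np.abs(noisy - dequantize(codes, w_max)).mean())
```

In a training setup along the lines of RandBET, a perturbation of this kind would be applied to the quantized weights during training; the exact procedure is described in the paper.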

Differences to MLSys'21 Work

Compared to our previous MLSys'21 work on random bit error robustness, this extended paper makes the following additional contributions:

- As an extension of the proposed weight clipping, and to further improve the robustness of deep neural networks (DNNs) against random bit errors in (quantized) weights, we consider per-layer weight clipping. We also combine per-layer weight clipping with our random bit error training and demonstrate significant robustness improvements.

- Besides random bit errors in the (quantized) weights, we also consider bit errors in the inputs and activations, which have been neglected in related work. In practice, however, inputs and activations are also subject to bit errors caused by low-voltage operation of the accelerator's memory. In both cases, bit errors have a devastating impact on DNN accuracy. However, injecting such bit errors during training can improve robustness considerably.

- Beyond random bit errors, we also consider adversarial bit errors. Specifically, we propose a novel, gradient-based attack that adversarially injects bit errors and reduces DNN performance to that of a random classifier. Our attack can compute targeted as well as untargeted adversarial bit errors and significantly outperforms the recently proposed bit flip attack (BFA).

- We further demonstrate that weight clipping and random or adversarial bit error training can improve robustness to adversarial bit errors considerably, which has not been possible so far. In practice, this makes it possible to train DNNs that are robust against both random and adversarial bit errors, enabling both energy-efficient and secure DNN accelerators.
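To give some intuition for per-layer weight clipping, the following NumPy sketch clips each layer to its own range and estimates how far a single flip of the most significant bit can move a weight. It assumes uniform quantization of the range $[-w_{max}, w_{max}]$ into $2^{bits}$ codes; the function names and the example layers are hypothetical, and the clipping procedure used in the paper may differ in its details.

```python
import numpy as np

def clip_per_layer(layers, w_max):
    """Clip each layer's weights to its own range [-w_max[name], w_max[name]]."""
    return {name: np.clip(w, -w_max[name], w_max[name]) for name, w in layers.items()}

def msb_flip_error(w_max, bits=8):
    """Absolute weight change caused by flipping the most significant bit.

    Assumes codes 0 .. 2**bits - 1 are mapped linearly onto [-w_max, w_max].
    """
    step = 2.0 * w_max / (2 ** bits - 1)   # weight change per code step
    return 2 ** (bits - 1) * step          # MSB flip moves the code by 2**(bits-1) steps

# Hypothetical two-layer example with a separate clipping range per layer.
layers = {"conv1": np.array([-0.5, 0.05, 0.3]), "fc": np.array([1.5, -2.0, 0.2])}
clipped = clip_per_layer(layers, {"conv1": 0.1, "fc": 1.0})
for name, w in clipped.items():
    print(name, w, "max MSB flip error:", msb_flip_error(np.max(np.abs(w))))
```

Under these assumptions, the worst-case change from one bit flip scales linearly with the clipping range, which is one way to see why a tighter range per layer limits the absolute damage of a bit error.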

Paper

The paper is available on ArXiv:

@article{Stutz2022TPAMI,
  author = {David Stutz and Nandhini Chandramoorthy and Matthias Hein and Bernt Schiele},
  title = {Random and Adversarial Bit Error Robustness: Energy-Efficient and Secure DNN Accelerators},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year = {2022},
}

Code

Coming soon! Check the code for random bit error robustness in the meantime: GitHub.

News & Updates

June 13, 2022. The paper is now available on IEEExplore.

May 31, 2022. The paper has been accepted at TPAMI.

April 26, 2021. The paper is available on ArXiv.