Ben Recht recently published some blog articles questioning the utility of prediction intervals and sets, especially as obtained using distribution-free, conformal methods. In this article, I want to add some color to the discussion given my experience with applying these methods in various settings.

Let me start with the elephant in the room: do people actually want uncertainty estimates in general? If you ask people in academia or industry, the first answer is usually yes. Somehow, as researchers and engineers, we want to understand when the models we train "know" and when they do not know (i.e., are likely correct or incorrect). However, often it is unclear how exactly uncertainty estimates can be useful. I think the main reason for this is that today's large models are meant to be extremely generic. Uncertainty estimates, however, are mostly relevant when a concrete application is targeted. In such cases, especially in safety-critical domains such as health or autonomous driving, we need our models to make actual decisions and potentially take actions. Here, uncertainty estimates are critical as decisions and actions usually have consequences and we generally want to avoid making bad decisions under high uncertainty.

Thinking in terms of decisions, we also need to consider the end user. Often, we think that providing an uncertainty estimate alongside a decision is a user-friendly approach. However, outputting a confidence alongside a prediction only adds value if we expect this approach to have tangible consequences. For example, if we expect doctors to re-consider the model decision on low confidence. Here, uncertainty estimates can add value in a human-AI setting where we provide an abstention or deferral scheme where we do not make a prediction on low confidence or invoke some other mechanism/human expert.

After having understood the decision making problem at hand, a key limitation of ad-hoc uncertainty estimates such as a neural network confidence is that it lacks some sort of reference. Is a confidence of 0.8 actually high enough to trust the prediction, or are confidences for correct predictions usually around 0.99? As a community, we developed tools to alleviate this problem by having models output a calibrated confidence that ideally reflects the probability of being correct. An example is Platt scaling. However, looking at a single prediction with an unknown label and a corresponding confidence still lacks some reference.

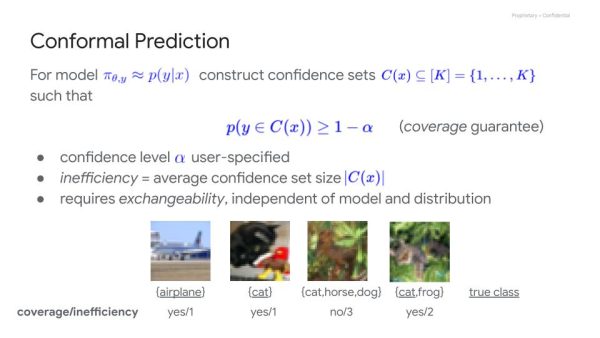

Overview of conformal prediction from one of my recent talks.

This is where — in my mind — uncertainty estimation techniques such as conformal prediction come into play. These methods tie a confidence estimate to a reference guarantee, usually called coverage guarantee. Instead of providing a confidence, the default format of uncertainty estimates are prediction intervals and sets (see figure above; many alternative formats are possible, too). In classification, for example, instead of predicting a single class, we predict a set of classes. On top of the set we tell the user (researcher or end user) that this set is calibrated to guarantee that the true class is included with say 99% probability.

Criticism usually starts with this coverage guarantee. This is because it is a marginal guarantee. It is not only marginal across examples that we predict on, but it is also marginal across the set examples we use to calibrate (this would be the validation set used in Platt scaling). It also assumes that the examples we encounter at test time to come from the same distribution as the calibration examples. Now, the guarantee being marginal across the calibration examples is easily alleviated with enough calibration examples. So, I am convinced that this is not really an issue in practice. Nevertheless, given a concrete test example, the guarantee does not tell us much.

At this point, it is important to realize that most practical methods for calibration exhibit the same problems. Empirical calibration of a model using Platt scaling also works marginally. They assume the test examples to come from the same distribution and are usually to be interpreted marginally across test examples. Only that they do not provide a formal coverage guarantee. )Please do point me to any theoretical results around this type of calibration if there is any.)

Nevertheless, having a coverage guarantee conditional on the test example would be way more compelling. And it is not for lack of trying. There is plenty of work in this direction and in some settings there has been progress. Obtaining coverage guarantees conditional on groups (such as attributes or classes) is fairly easy to achieve by now (assuming enough calibration examples). Still, if I go to the doctor, these types of guarantees are not what I am interested in. Knowing that my diagnosis includes my true sickness with 90% probability across all patients of my age is not more useful than knowing it is included with 90% probability across all patients.

Exceptions to this problem, such as Bayesian methods, have other problems. The prediction intervals I would get using Bayesian methods would be conditional on me as an example/patient, but usually rely on some model assumption. If the model assumption is violated, there is no guarantee whatsoever. In practice, especially in complex tasks, with large models, we often need to make quite strong model assumptions to get things working. But then, these assumptions are very likely to be broken in practice. So, in my view, we have the following alternatives: get conditional guarantees in theory using Bayesian methods that likely do not hold in practice; getting no guarantee whatsoever using empirical methods; or getting a marginal guarantee without any assumptions using the "conformal way". In this comparison, I feel that conformal prediction looks pretty good — it is easy to do, scales well with data, and gives you a marginal guarantee nearly for free. Besides, we can easily do empirical calibration first and conformal calibration on top.

If you are still not convinced that you have nothing to lose, what else do conformal methods have to offer? At this point I want to share two practical insights I gained applying these methods in very practical applications.

First, there are occasionally use cases for prediction sets. For example, physicians are used to give differential diagnoses when seeing patients. These diagnoses may include multiple possible conditions, potentially ranked from more likely to less likely. We can construct such differential diagnoses using conformal prediction sets. However, there may be rather complicated constraints on top of the constructed prediction sets (e.g., hierarchical structure on differential diagnoses, different risk classifications of conditions, etc.). Often, it is non-trivial to calibrate a system with multiple hyper-parameters that govern inference in a way that meets these constraints. In such cases, I view conformal methods as a framework to calibrate a complex system with multiple hyper-parameters with respect to a set of constraints. Sure, we get a marginal guarantee, but first and foremost conformal prediction provides the means to perform this calibration easily in a data-driven and fairly out-of-the-box way. Some examples of such cases are outlined in some of my recent works and methods such as learn then test or conformal risk can easily deal with a variety of different constraints.

Second, conformal prediction is closely related to (multiple) hypothesis testing. In fact, standard conformal prediction is equivalent to testing the hypotheses "k is the true class y" — find a great derivation of my co-author Arnaud Doucet in Appendix B of our recent paper. More precisely, conformal prediction just provides a data-driven way to compute valid p-values in various settings. These p-values can be incredibly useful for decision making in practice. In the simplest example of a binary classification problem, conformal p-values allow to control, for example, the true positive rate at user-specified level at test time without needing re-calibration. It also allows us to easily abstain from a decision or defer to another system. We can also test multiple examples together or combine decisions from multiple systems on the p-value level. Essentially whatever testing scenario is relevant can be applied on top of these p-values. For example, there was a nice paper showing how to use such deferral mechanisms for speeding up inference while lower-bounding (marginal) performance.

Overall, I have come to the following conclusion: In many cases there is no reason not to perform conformal calibration wherever we are calibrating a model or need uncertainty estimates. While we do not get perfectly conditional guarantees, we get a marginal one "for free". This is because these methods do not need more data, extra compute or stronger assumptions than standard, empirical calibration. Often, we can get "more conditional" guarantees on top using extra data or some extra work. In many settings, conformal prediction enables calibration subject to complex constraints, which standard calibration is not well-equipped for.