The Heidelberg Laureate Forum (HLF) invites laureates of the premier computer science (CS) and math prizes alongside young researchers for a networking conference in beautiful Heidelberg. Specifically, there are six awards that can be considered the Nobel prizes of CS and math: the ACM Turing Award, the ACM Prize in Computing, the Abel Prize, the Fields Medal, the Nevanlinna Prize and the IMU Abacus Medal. Each year, different laureates are invited, and young researchers pursuing their undergraduate, graduate or post-doc studies can apply to join them. Besides offering an amazing program, the forum is entirely funded by the HLF foundation (HLFF) and supported by several other German science foundations. My participation was sponsored by an Abbe grant from the Carl Zeiss Foundation. As part of the Abbe grant, I also had the opportunity to take over the Twitter/X account of the foundation during the event. Here are some impressions (click to see the corresponding threads):

From my perspective, there are three main components in the forum's program: talks and panels, "scientific interaction", and networking. Talks come in different formats and their content is entirely up to the laureates. Some laureates give technical talks on their past or current work, others present thoughts on future work, and yet others give speeches on important non-technical problems relevant to scientists. For example, Bob Metcalfe gave a speech walking the audience through early computing and networking history as well as his work on Ethernet. In contrast, Adi Shamir, Yael Tauman Kalai and Hugo Duminil-Copin gave more technical lectures.

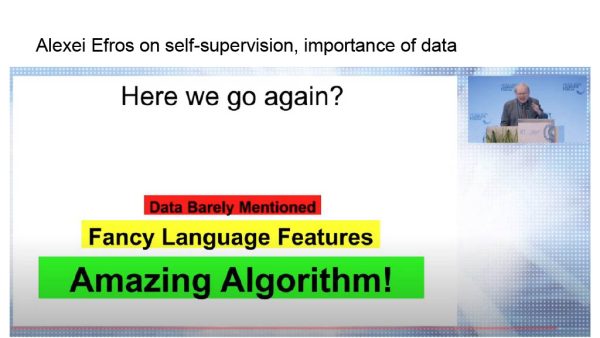

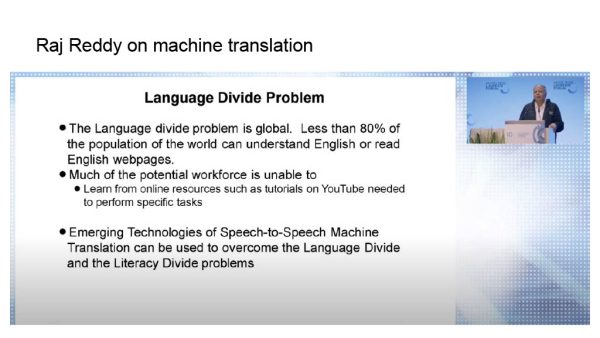

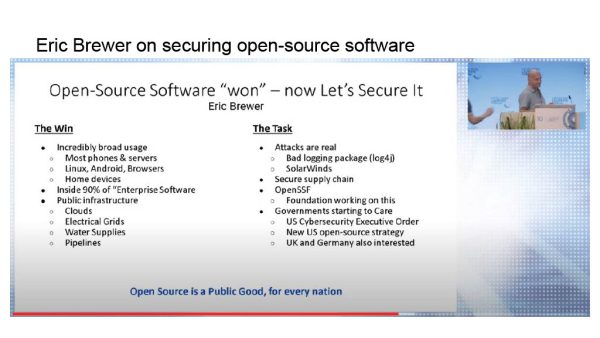

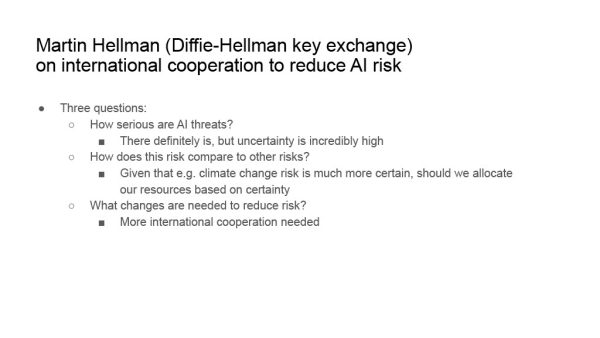

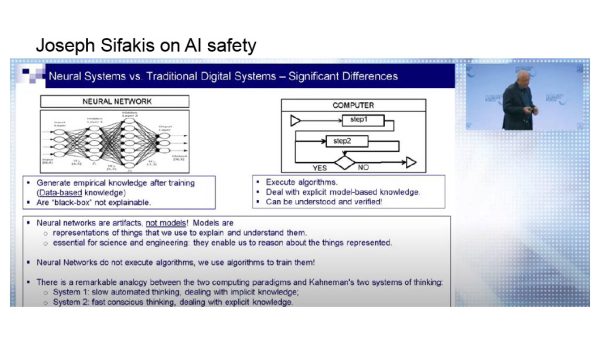

Even though I work in AI safety, I was surprised by how many laureates talked about AI in general and AI safety and risk in particular. Here are some of my take-aways; all talks can be found on YouTube.

Then, there were plenty of panels on topics ranging from interdisciplinary research and inclusion to generative AI. For example, the panel on generative AI featured Sébastien Bubeck, Björn Ommer, Sanjeev Arora and Margo Seltzer. There was a lively discussion on what the "emergent abilities" of recent foundation models are and how suddenly they occur in terms of scale. Personally, I liked Sanjeev Arora's perspective, framing it as "compositional abilities" to avoid the incredibly overloaded term "emergent". I also appreciated Björn Ommer's perspective, describing what happens in text-to-image models as high-dimensional interpolation.

"Scientific interaction" includes either master-classes or Q&A sessions with laureates in smaller groups. These allow much more intimate interaction with laureates. While master-classes usually discuss specific technical topics, the Q&A sessions have mostly been used to learn about academic careers. For example, I joined a Q&A session with Daniel Spielman because he gave incredibly good talks. He shared how he prepares talks and lectures, how he strategically chooses research topics, how he advises students and much more.

Finally, there is networking. This is probably the main part of the HLF. There were long coffee breaks, fancy dinners, a boat tour on the Neckar, a tour through the Heidelberg castle and many group activities. My favorite, both this time and last time, was dinner at the Technik Museum Speyer. Dining below the space shuttle Buran is an incredible experience. In general, I was encouraged to meet different people every day and the HLF did a great job selecting a very diverse set of young researchers.

Overall, I can only recommend that young researchers apply. The application deadline is usually at the beginning of February each year, and the application involves a CV, a research statement and a mission statement. You can find examples from my application here:

Throughout my bachelor's studies, I was drawn to the idea of teaching machines how to "see". Therefore, all my seminar papers discussed the latest advances in deep learning while my bachelor's thesis as well as several internships and research stays addressed various problems in computer vision, including image and video segmentation, keypoint tracking and object detection. In my master's thesis, I finally applied deep learning to the challenging problem of 3D reconstruction without ground truth shapes. While this worked incredibly well at the time, I became even more interested in understanding some of the failure modes of deep neural networks. As a result, my PhD focused on identifying, understanding and potentially alleviating such issues. For example, this included so-called adversarial examples, imperceptibly perturbed images causing mis-classification, and the problem of mis-calibration, meaning over-confident but often incorrect predictions. At DeepMind, I am expanding this work by addressing uncertainty estimation, provenance detection, interpretability and fairness. Overall, by understanding and tackling these issues, I aim to make deep learning – and, by extension, artificial intelligence (AI) – safe and trustworthy to use. This is particularly important in applications that are inherently safety-critical, such as health. However, as highlighted by releases such as ChatGPT or Stable Diffusion, even seemingly creative applications such as language or image generation can negatively impact individual users as well as society as a whole. Unfortunately, despite progress on individual topics such as robustness, interpretability or fairness, these aspects are still mostly ignored when evaluating and, importantly, deploying the next generation of large AI models. With my work at DeepMind, I want to contribute to a scientific and scalable approach for rigorously discovering and quantifying these AI risks, taking into account their severity alongside human feedback.

In 2019, applying to the 7th HLF, my mission statement was about asking the right research questions and building relationships with peers and senior researchers. In the meantime, completing my PhD made me comfortable asking tough research questions and attending the HLF in 2019 had an incredible effect on how I built professional relationships. In fact, contacts I made at the HLF started an exciting interdisciplinary research project with IBM Research. With these learnings, I joined DeepMind in its mission to solve humanity's problems using artificial intelligence (AI). Personally, I want to make AI safe for billions to use. Obviously, such ambitious goals require large teams of researchers and engineers. So far, however, I have experienced academia as a bottom-up approach to research in which curious researchers with sufficient freedom find unsolved problems and build communities to tackle them. In larger organizations with defined goals, in contrast, there is more need for coordination among groups of researchers – often in a top-down fashion. This might not only apply to corporate research but also to bigger projects in academia – for example, CERN or the Human Brain Project. Ideally, both approaches complement each other. In practice, however, I find that they tend to clash and create friction. I find these dynamics quite challenging to navigate as a junior researcher. It requires me to learn about research strategy and organization and develop longer-term research agendas that fit the organization’s mission. From my experience in 2019, I know that the HLF is the perfect place to discuss these challenges with ambitious peers and with accomplished researchers, who often direct big research institutes themselves and influence thousands of researchers.