Overview

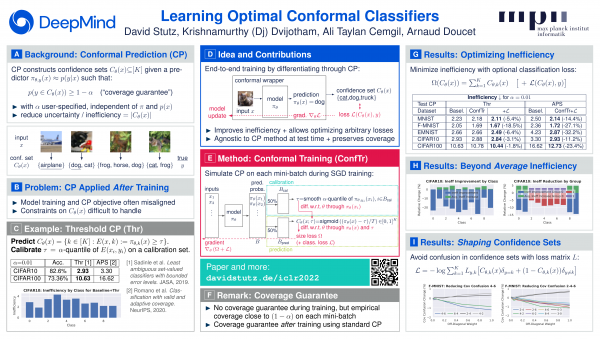

In conformal training, a simple differentiable threshold conformal predictor is used during training in order to directly optimize losses defined on the predicted confidence sets. For example, let $E(x, k)$ be a conformity score, e.g., the logit or softmax probability for class $k$ of a classifier. Then, we can perform conformal prediction by defining the confidence sets as $C(x) := \{k: E(x, k) \geq \tau\}$ and calibrate the threshold $\tau$ on a calibration set $\{(x_i, y_i\}_{i = 1}^n$ using the $\alpha(1 + 1/n)$-quantile of the true scores $\{E(x_i,y_i)\}_{i = 1}^n$. This guarantees that the true label $y$ for test example $x$ is included in $C(x)$ with probability at least $1 - \alpha$ (where probability is marginal across calibration and test examples). For conformal training, this is made differentiable by defining "smooth" confidence sets $C_k(x) := \sigma((E(x,k) - \tau)/T) \in [0, 1]$ with temperature $T$. The calibration procedure can be made differentiable using a differentiable sorter (for the quantile computation). Then, conformal training performs calibration and prediction on each mini-batch and optimizes, e.g., the expected set size $\sum_k C_k(x)$.

The paper shows that this reduces the average set size, often called inefficiency, but can also be used to empirically influence which classes are included in the confidence sets $C(x)$. However, conformal training was developed with split conformal prediction in mind. Moreover, conformal prediction or distribution-free uncertainty quantification in general has made quite a few advances since we worked on conformal training. Besides guaranteeing coverage, many methods allow risk control on arbitrary risks and in the face of distributions shifts. I think that conformal training can be useful in many of these settings. Thus, I want to present some research ideas for anyone to pick up and work on — based on our open-source Jax implementation (or this Julia re-implementation).

Transductive or Cross-Conformal Training

In conformal training, we use split conformal prediction at two places: during training on each mini-batch and after training for calibration. During training this is less critical in terms of data efficiency as we randomize batches as in standard stochastic gradient descent training. After training, however, we might want to make better use for our calibration examples. This can be achieved using transductive or some form of cross-conformal/jackknife+ conformal prediction. Especially in the latter case, conformal training could be very useful. Essentially, a simple research question is to what extent conformal training can improve inefficiency, especially for small training/calibration sets in cross-conformal or jackknife+ conformal prediction compared to a standard cross-entropy baseline. The more advanced question is how to properly utilize conformal training for transductive conformal prediction.

Conformal risk training

During conformal training, we simulate standard conformal prediction on each mini-batch with the target of minimizing inefficiency while guaranteeing coverage $1 - \alpha$. With recent work on controlling other risks besides mis-coverage, for example RCPS or conformal risk, it would be interesting to explore "conformal risk training": How can we use conformal training to contorl arbitrary risks. Initial results seemed promising by making RCPS differentiable and simulating it on every mini-batch. But I would expect that using conformal risk works much better. The research question here is whether conformal training can be adapted to conformal risk and whether this improves inefficiency or has other advantages beyond that.

Semi-supervised conformal training

Interestingly, in each update step, conformal training does not need labels for all examples in the mini-batch. It only needs labels for half of the examples used for calibration. This means that we could use conformal training in a semi-supervised setting where we potentially have way more unlabeled examples than labeled once. The research question would be whether using additional unlabeled examples during conformal training can further improve inefficiency or can reduce the variation in inefficiency/coverage observed across calibration/test splits.

Conditional coverage using conformal training

In this recent paper, a new loss was proposed that allows to improve conditional coverage when using APS at test time. However, they do not exactly use conformal training as shown in Algorithm 1 by not differentiating through the calibration part of conformal prediction. I am sure that results could be improved when applying this loss for standard conformal training. Here, the research question is to reproduce the loss for conformal training and compare. Moreover, it would be interesting to see if a similar loss can be found for different conformity scores.

Robust conformal training

While this paper uses randomized smoothing to get coverage guarantees robust to adversarial examples, it is known that randomized smoothing benefits significantly from adversarial training, see here. Here, conformal training could be interesting. Can we device an adversarial conformal training procedure that, combined with randomized smoothing and conformal prediction, improves adversarially robust coverage guarantees?

Conclusion

Overall, I think there are several exciting directions to explore in terms of conformal training. This includes both simple research questions to get started with conformal prediction as well as more tricky problems. Thus, I hope that some these ideas are picked up and presented at upcoming conferences.