Adversarially Robust Generalization and Flat Minima

Quick links: Pre-Print | Code | ICCV'21 Summary | ICCV'21 Slides | KDD'21 AdvML Short Paper | ICML'21 UDL Short Paper | ICML'21 UDL Poster | BibTeX

Abstract

Adversarial training (AT) has become the de-facto standard to obtain models robust against adversarial examples. However, AT exhibits severe robust overfitting: cross-entropy loss on adversarial examples, so-called robust loss, decreases continuously on training examples, while eventually increasing on test examples. In practice, this leads to poor robust generalization, i.e., adversarial robustness does not generalize well to new examples. In this paper, we study the relationship between robust generalization and flatness of the robust loss landscape in weight space, i.e., whether robust loss changes significantly when perturbing weights. To this end, we propose average- and worst-case metrics to measure flatness in the robust loss landscape and show a correlation between good robust generalization and flatness. For example, throughout training, flatness reduces significantly during overfitting such that early stopping effectively finds flatter minima in the robust loss landscape. Similarly, AT variants achieving higher adversarial robustness also correspond to flatter minima. This holds for many popular choices, e.g., AT-AWP, TRADES, MART, AT with self-supervision or additional unlabeled examples, as well as simple regularization techniques, e.g., AutoAugment, weight decay or label noise. For fair comparison across these approaches, our flatness measures are specifically designed to be scale-invariant and we conduct extensive experiments to validate our findings.
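The average- and worst-case flatness measures described above can be illustrated on a toy problem. The following is a minimal sketch, not the paper's implementation, and it rests on simplifying assumptions that do not come from the paper. It uses a linear binary classifier instead of a deep network, because its L∞ robust logistic loss has a closed form. It also uses relative per-weight perturbations |ν_i| ≤ ξ·|w_i| as a stand-in for the paper's scale-invariant perturbation scheme, and it leaves the bias unperturbed. The function names (`robust_loss`, `average_flatness`, `worst_flatness`) and parameters (`eps`, `xi`) are hypothetical. Here, average-case flatness is the expected increase in robust loss under random weight perturbations, and worst-case flatness is the largest increase within the same perturbation set.

```python
import itertools
import math
import random


def robust_loss(w, b, data, eps):
    """Exact L-infinity robust logistic loss of a linear classifier (toy assumption).

    For f(x) = w.x + b and labels y in {-1, +1}, the worst input perturbation
    with ||delta||_inf <= eps lowers the margin y*f(x) by exactly eps*||w||_1,
    so no iterative attack is needed in this linear setting.
    """
    l1 = sum(abs(wi) for wi in w)
    total = 0.0
    for x, y in data:
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        z = eps * l1 - margin
        # Numerically stable log(1 + exp(z)).
        total += max(z, 0.0) + math.log1p(math.exp(-abs(z)))
    return total / len(data)


def average_flatness(w, b, data, eps, xi, samples=50, seed=0):
    """Mean robust-loss increase under random relative weight noise.

    Each weight is perturbed uniformly within +/- xi * |w_i| (hypothetical
    scheme); antithetic pairs (+nu, -nu) are used to reduce variance.
    """
    rng = random.Random(seed)
    base = robust_loss(w, b, data, eps)
    total = 0.0
    for _ in range(samples):
        nu = [rng.uniform(-xi, xi) * abs(wi) for wi in w]
        plus = [wi + ni for wi, ni in zip(w, nu)]
        minus = [wi - ni for wi, ni in zip(w, nu)]
        pair = 0.5 * (robust_loss(plus, b, data, eps) + robust_loss(minus, b, data, eps))
        total += pair - base
    return total / samples


def worst_flatness(w, b, data, eps, xi):
    """Maximum robust-loss increase over the box |nu_i| <= xi * |w_i|.

    This toy robust loss is convex in w, so its maximum over a box is attained
    at one of the 2^d corners; enumerating them only works for tiny d.
    """
    base = robust_loss(w, b, data, eps)
    worst = base
    for signs in itertools.product((-1.0, 1.0), repeat=len(w)):
        corner = [wi + s * xi * abs(wi) for wi, s in zip(w, signs)]
        worst = max(worst, robust_loss(corner, b, data, eps))
    return worst - base


if __name__ == "__main__":
    # Hypothetical 2-D toy data: (features, label in {-1, +1}).
    toy = [([1.0, 2.0], 1), ([2.0, 0.5], 1), ([-1.0, -1.5], -1), ([-2.0, 0.5], -1)]
    weights = [1.0, 0.5]
    print("robust loss:", robust_loss(weights, 0.0, toy, eps=0.1))
    print("average-case flatness:", average_flatness(weights, 0.0, toy, eps=0.1, xi=0.1))
    print("worst-case flatness:", worst_flatness(weights, 0.0, toy, eps=0.1, xi=0.1))
```

Because this toy robust loss is convex in the weights, the worst-case increase can be computed exactly by checking the corners of the perturbation box, and it always upper-bounds the average-case value. Neither property holds for a deep network, where the maximization over weight perturbations would have to be approximated, for example with a few steps of projected gradient ascent on the weights.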

Paper

The paper is available on arXiv:

Paper on arXiv

@inproceedings{Stutz2021ICCV,
    author = {David Stutz and Matthias Hein and Bernt Schiele},
    title = {Relating Adversarially Robust Generalization to Flat Minima},
    booktitle = {IEEE International Conference on Computer Vision (ICCV)},
    publisher = {IEEE Computer Society},
    year = {2021}
}

Short versions of the paper appeared at the ICML'21 Workshop on Uncertainty and Robustness in Deep Learning (UDL) and the KDD'21 Workshop on Adversarial Learning Methods for Machine Learning and Data Mining (AdvML):

UDL'21 Short Paper | AdvML'21 Short Paper

Presentations

ICCV'21 Slides

Posters

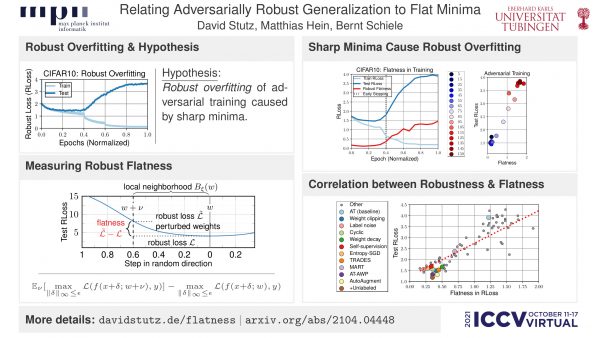

ICCV'21 summary slide:

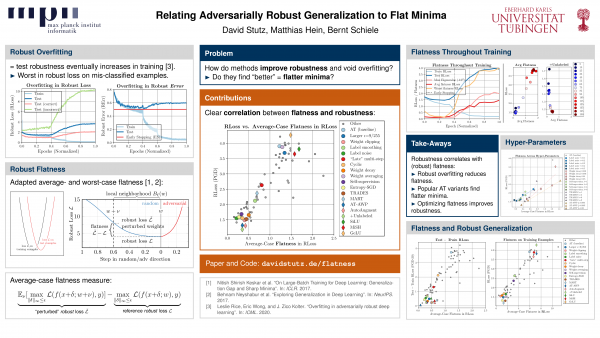

ICML'21 UDL workshop poster:

Code & Data

The code can be found on GitHub:

Code on GitHub

News & Updates

Oct 26, 2021. The code is now available at GitHub.

Oct 21, 2021. I talked about the paper at the Math Machine Learning Seminar of MPI MiS and UCLA.

Jul 26, 2021. The paper was accepted at ICCV'21.