OPEN SOURCE

NYU Depth v2 Segmentation Tools

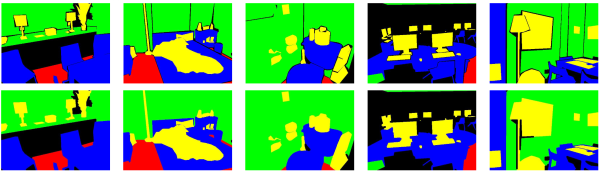

The repository below contains MATLAB tools to pre-process the segmentation ground truth provided as part of the NYU Depth v2 dataset. As illustrated in Figure 2, the ground truth is processed to remove thin unlabeled parts that are problematic for evaluation.
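To make the idea of removing thin unlabeled parts concrete, here is a minimal Python sketch. It is not the repository's MATLAB code: it assumes that unlabeled pixels carry the label 0 and swaps in a simple technique, a breadth-first nearest-label fill limited to a few pixels, so that thin unlabeled strips between segments disappear while the interior of large unlabeled regions stays untouched. The function name `fill_thin_unlabeled` and the parameter `max_steps` are hypothetical.

```python
from collections import deque

UNLABELED = 0  # assumption: label 0 marks unlabeled pixels


def fill_thin_unlabeled(labels, max_steps=2):
    """Assign unlabeled pixels within max_steps (4-connected) of a labeled
    pixel the label that reaches them first; returns a new label image."""
    height, width = len(labels), len(labels[0])
    result = [row[:] for row in labels]  # leave the input untouched
    # Multi-source breadth-first search seeded with every labeled pixel.
    queue = deque(
        (y, x, 0)
        for y in range(height)
        for x in range(width)
        if labels[y][x] != UNLABELED
    )
    while queue:
        y, x, steps = queue.popleft()
        if steps == max_steps:
            continue  # do not grow further than max_steps pixels
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width and result[ny][nx] == UNLABELED:
                result[ny][nx] = result[y][x]
                queue.append((ny, nx, steps + 1))
    return result
```

With `max_steps=1`, a one-pixel-wide unlabeled column between two segments is absorbed by a neighboring segment, whereas a five-pixel-wide unlabeled gap only shrinks by one pixel on each side.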

The original NYU Depth v2 dataset is required and can be found here.

NYU Depth v2 Segmentation Tools on GitHub

Usage overview:

- convert_dataset.m: Splits the dataset into training and test sets according to list_train.txt and list_test.txt. In addition, the ground truth segmentations are converted to the format used by the extended Berkeley Segmentation Benchmark for superpixel evaluation.
- collect_train_subset.m: Copies all files belonging to the training subset of 200 images, listed in list_train_subset.txt, into separate folders.
- collect_test_subset.m: Copies all files belonging to a subset of the test set into separate folders.
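The list-driven copying described above can be sketched in Python as follows. This is an illustration, not the repository's MATLAB implementation, and it rests on assumptions: that each list file holds one image identifier per line, and that the corresponding files are named after the identifier plus an extension. The helpers `read_list` and `copy_subset` and the `.png` default are hypothetical.

```python
import shutil
from pathlib import Path


def read_list(list_path):
    """Return the non-empty, stripped lines of a list file."""
    with open(list_path) as handle:
        return [line.strip() for line in handle if line.strip()]


def copy_subset(list_path, source_dir, target_dir, extension=".png"):
    """Copy every file named in the list from source_dir to target_dir.

    Assumption: files are stored as <identifier><extension>.
    Returns the names of the files that were actually copied.
    """
    source_dir, target_dir = Path(source_dir), Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for name in read_list(list_path):
        source = source_dir / (name + extension)
        if source.is_file():  # skip identifiers without a matching file
            shutil.copy2(source, target_dir / source.name)
            copied.append(source.name)
    return copied
```

Calling `copy_subset("list_train_subset.txt", "images", "train_subset/images")` would then gather the listed images in a separate folder; the folder names here are placeholders.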

See the corresponding MATLAB files for detailed instructions.

- N. Silberman, D. Hoiem, P. Kohli, R. Fergus. Indoor segmentation and support inference from RGBD images. Computer Vision, European Conference on, volume 7576 of Lecture Notes in Computer Science, pages 746–760. Springer Berlin Heidelberg, 2012.

- D. Stutz. Superpixel Segmentation using Depth Information. Bachelor thesis, RWTH Aachen University, Aachen, Germany, 2014.

- D. Stutz. Superpixel Segmentation: An Evaluation. Pattern Recognition (J. Gall, P. Gehler, B. Leibe (Eds.)), Lecture Notes in Computer Science, vol. 9358, pages 555–562, 2015.

- X. Ren, L. Bo. Discriminatively trained sparse code gradients for contour detection. In Advances in Neural Information Processing Systems, volume 25, pages 584–592. Curran Associates, 2012.