
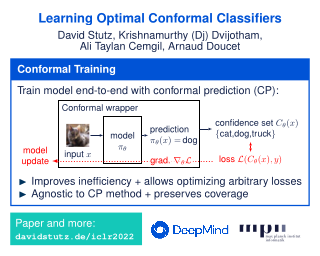

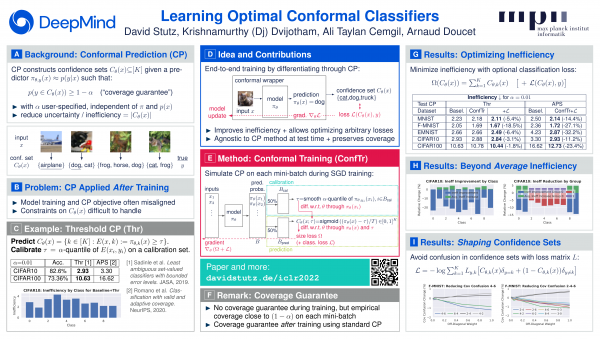

Conformal Training

Quick links: Pre-Print | OpenReview | Code | Slides | Poster | ICML'22 DFUQ Short Paper | ISDFS Talk

Abstract

Modern deep-learning-based classifiers achieve very high accuracy on test data, but this does not provide sufficient guarantees for safe deployment, especially in high-stakes AI applications such as medical diagnosis. Usually, predictions are obtained without a reliable uncertainty estimate or a formal guarantee. Conformal prediction (CP) addresses these issues by using the classifier's probability estimates to predict confidence sets containing the true class with a user-specified probability. However, using CP as a separate processing step after training prevents the underlying model from adapting to the prediction of confidence sets. Thus, this paper explores strategies to differentiate through CP during training with the goal of training the model with the conformal wrapper end-to-end. In our approach, conformal training (ConfTr), we specifically "simulate" conformalization on mini-batches during training. We show that ConfTr outperforms state-of-the-art CP methods for classification by reducing the average confidence set size (inefficiency). Moreover, it allows us to "shape" the confidence sets predicted at test time, which is difficult for standard CP. In experiments on several datasets, we show that ConfTr can influence how inefficiency is distributed across classes, or guide the composition of confidence sets in terms of the included classes, while retaining the guarantees offered by CP.
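To make the idea of "simulating" conformalization on a mini-batch more concrete, here is a minimal JAX sketch. It is not the released implementation: the abstract does not specify the conformity score, the smoothing, or the loss, so the choices below (thresholding the true-class probability, a sigmoid relaxation of set membership, a hinge on the soft set size, and a plain `jnp.sort` for the quantile) are illustrative assumptions, as are all function names and the `temperature` parameter.

```python
import math

import jax
import jax.numpy as jnp


def calibrate_threshold(probs, labels, alpha):
    """Split conformal calibration with a simple threshold score (assumed).

    Returns tau, the floor(alpha * (n + 1))-th smallest true-class
    probability. For exchangeable data, the sets {k : p_k >= tau} then
    contain the true class with probability at least 1 - alpha.
    """
    n = probs.shape[0]
    true_probs = probs[jnp.arange(n), labels]
    k = math.floor(alpha * (n + 1))
    if k < 1:
        # Too few calibration points for this alpha: include every class.
        return jnp.asarray(0.0)
    return jnp.sort(true_probs)[k - 1]


def predict_sets(probs, tau):
    """Hard confidence sets, as used at test time (boolean mask per class)."""
    return probs >= tau


def smooth_sets(probs, tau, temperature=0.1):
    """Soft set membership in (0, 1); a differentiable stand-in for `>=`."""
    return jax.nn.sigmoid((probs - tau) / temperature)


def size_loss(probs, tau, temperature=0.1):
    """Hinge on the soft set size: penalize sets larger than one class."""
    soft = smooth_sets(probs, tau, temperature)
    return jnp.mean(jnp.maximum(soft.sum(axis=1) - 1.0, 0.0))


def conftr_batch_loss(logits, labels, alpha=0.1, temperature=0.1):
    """Calibrate on one half of the batch, predict soft sets on the other."""
    probs = jax.nn.softmax(logits, axis=1)
    half = logits.shape[0] // 2
    tau = calibrate_threshold(probs[:half], labels[:half], alpha)
    return size_loss(probs[half:], tau, temperature)


# Toy usage: random logits stand in for a model's outputs on one mini-batch.
logits = jax.random.normal(jax.random.PRNGKey(0), (64, 10))
labels = jax.random.randint(jax.random.PRNGKey(1), (64,), 0, 10)
loss, grads = jax.value_and_grad(conftr_batch_loss)(logits, labels)
```

In this sketch the gradient reaches the logits both through the soft sets and through the threshold `tau`, which is what lets the model adapt to the conformal wrapper. At test time one would still run the hard `calibrate_threshold` and `predict_sets` steps on held-out calibration data, since the coverage guarantee comes from that step rather than from training.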

Paper

The paper is available on ArXiv:

Paper on ArXiv | Paper on OpenReview

@inproceedings{Stutz2022ICLR,
  author    = {David Stutz and Krishnamurthy Dvijotham and Ali Taylan Cemgil and Arnaud Doucet},
  title     = {Learning Optimal Conformal Classifiers},
  booktitle = {International Conference on Learning Representations (ICLR)},
  year      = {2022},
}

Appendix F incorrectly states that we used 4 channels for our ResNets on CIFAR10 while we actually used 64.

A short paper contributed to the ICML 2022 DFUQ workshop can be found here.

Poster

The poster below is also available as a PDF:

Poster as PDF

Talks

This work was presented at the Seminar on Distribution-Free Statistics organized by Anastasios Angelopoulos.

Code

The code, with a JAX implementation of conformal training, can be found on GitHub:

Conformal Training on GitHub

News & Updates

Aug 17, 2022. The code is now available on GitHub.

July 23, 2022. A short paper was presented at the ICML 2022 DFUQ workshop.

Apr 6, 2022. The poster is now available.

Mar 7, 2022. The camera-ready version is now on ArXiv.

Jan 20, 2022. Our paper was accepted at ICLR 2022!

Oct 18, 2021. The paper is available as a pre-print on ArXiv.