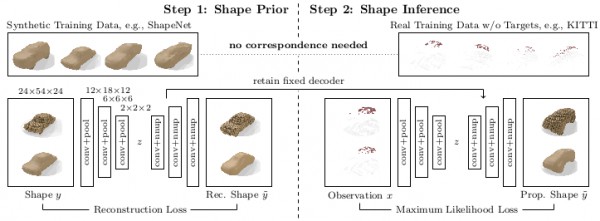

Figure 1: Overview of the proposed weakly-supervised 3D shape completion approach.

Our CVPR'18 paper proposes a weakly-supervised, learning-based approach to 3D shape completion of sparse and noisy point clouds. We compare our approach against state-of-the-art data-driven and learning-based approaches [] on ShapeNet [Chang et al., 2015], KITTI [Geiger et al., 2012] and ModelNet [Wu et al., 2015]. In the spirit of reproducible research, we release our revised code base, including documentation, as well as the extracted datasets used for evaluation. The code also includes utilities for visualization and evaluation.

The code and data are bundled in the following repository:

Code on GitHub

The paper is available as PDF:

Paper (∼ 2.7MB)

Supplementary (∼ 4.9MB)

```bibtex
@inproceedings{Stutz2018CVPR,
  title = {Learning 3D Shape Completion from Laser Scan Data with Weak Supervision},
  author = {Stutz, David and Geiger, Andreas},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  publisher = {IEEE Computer Society},
  year = {2018}
}
```

Code

The code is split across several repositories, each containing an independent part of the code; all of them are included in the main repository as sub-repositories:

| Repository | Description |
|---|---|
| daml-shape-completion | Shape completion implementations: amortized maximum likelihood (AML, including the VAE [] shape prior), maximum likelihood (ML), Engelmann et al. [], and the supervised baseline (Sup). The implementations are mostly in Torch, with C++ used for []. Installation requirements and usage instructions are included. |
| mesh-evaluation | Efficient C++ implementation of mesh-to-mesh distance (accuracy and completeness) as well as mesh-to-point distance; this tool can be used for evaluation. |
| bpy-visualization-utils | Python and Blender tools for visualizing meshes, occupancy grids and point clouds. These tools were used to create the visualizations in the paper. |
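To illustrate what accuracy and completeness measure, here is a minimal Python sketch that approximates both on points sampled from the mesh surfaces, using brute-force nearest neighbours. It is not the mesh-evaluation tool, which computes the distances in C++; the function names and the point-sampling approximation are our own assumptions for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[Point, Point, Point]


def triangle_area(tri: Triangle) -> float:
    """Area of a 3D triangle: half the norm of the edge cross product."""
    a, b, c = tri
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_surface(triangles: Sequence[Triangle], n: int,
                   rng: random.Random) -> List[Point]:
    """Draw n points uniformly from a triangle mesh surface (area-weighted)."""
    areas = [triangle_area(t) for t in triangles]
    points = []
    for a, b, c in rng.choices(triangles, weights=areas, k=n):
        s, r = math.sqrt(rng.random()), rng.random()
        # Barycentric weights (1 - s, s(1 - r), s r) are uniform over the triangle.
        w = (1.0 - s, s * (1.0 - r), s * r)
        points.append(tuple(w[0] * a[i] + w[1] * b[i] + w[2] * c[i]
                            for i in range(3)))
    return points


def nearest_distance(p: Point, cloud: Sequence[Point]) -> float:
    """Distance from p to its nearest neighbour in cloud (brute force)."""
    return min(math.dist(p, q) for q in cloud)


def accuracy(pred: Sequence[Point], gt: Sequence[Point]) -> float:
    """Mean distance from predicted samples to the ground truth (lower is better)."""
    return sum(nearest_distance(p, gt) for p in pred) / len(pred)


def completeness(pred: Sequence[Point], gt: Sequence[Point]) -> float:
    """Mean distance from ground-truth samples to the prediction (lower is better)."""
    return sum(nearest_distance(q, pred) for q in gt) / len(gt)
```

Accuracy penalizes predicted surface that lies far from the ground truth, while completeness penalizes ground-truth surface that the prediction misses. The brute-force search is quadratic in the number of samples, so a spatial index such as a KD-tree would be needed at realistic sample counts.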

The repositories above contain the essentials for reproducing the results reported in the paper, using the data available for download below. Some additional repositories contain related tools:

| Repository | Description |
|---|---|
| mesh-voxelization | Efficient C++ implementation for voxelizing watertight triangular meshes into occupancy grids and/or signed distance functions (SDFs). This tool was used to create the shape completion benchmarks described below. |
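As a rough illustration of voxelizing a watertight mesh into an occupancy grid, the Python sketch below marks a voxel as occupied if its center lies inside the mesh, using a ray-parity test with Moller-Trumbore ray/triangle intersection. This is not necessarily how mesh-voxelization works internally and it does not compute SDFs; the function names, the cubic grid over `[lo, hi]^3` and the fixed ray direction are assumptions made for the example.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_hits_triangle(origin: Vec, direction: Vec, tri: Triangle,
                      eps: float = 1e-12) -> bool:
    """Moller-Trumbore test: does origin + t * direction (t > 0) hit tri?"""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:  # ray (nearly) parallel to the triangle's plane
        return False
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return False
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return False
    return _dot(e2, q) * inv_det > eps  # hit must lie in front of the origin


def is_inside(point: Vec, triangles: Sequence[Triangle],
              direction: Vec = (0.91, 0.33, 0.23)) -> bool:
    """Parity test: a ray from an interior point crosses a closed surface an odd
    number of times. The skewed direction makes exact edge/vertex hits unlikely."""
    hits = sum(ray_hits_triangle(point, direction, t) for t in triangles)
    return hits % 2 == 1


def voxelize(triangles: Sequence[Triangle], resolution: int,
             lo: float, hi: float) -> List[List[List[int]]]:
    """Occupancy grid over the cube [lo, hi]^3: 1 if the voxel center is inside."""
    size = (hi - lo) / resolution
    grid = [[[0] * resolution for _ in range(resolution)] for _ in range(resolution)]
    for i in range(resolution):
        for j in range(resolution):
            for k in range(resolution):
                center = (lo + (i + 0.5) * size,
                          lo + (j + 0.5) * size,
                          lo + (k + 0.5) * size)
                grid[i][j][k] = int(is_inside(center, triangles))
    return grid


def box_mesh(lo: float, hi: float) -> List[Triangle]:
    """Twelve triangles of the axis-aligned box [lo, hi]^3, as a watertight test mesh."""
    c = [(x, y, z) for x in (lo, hi) for y in (lo, hi) for z in (lo, hi)]
    # Corner index is 4 * ix + 2 * iy + iz; each quad is listed in cyclic order.
    quads = [(0, 1, 3, 2), (4, 6, 7, 5), (0, 4, 5, 1),
             (2, 3, 7, 6), (0, 2, 6, 4), (1, 5, 7, 3)]
    return [tri for a, b, d, e in quads
            for tri in ((c[a], c[b], c[d]), (c[a], c[d], c[e]))]
```

For example, `voxelize(box_mesh(0.25, 0.75), 4, 0.0, 1.0)` marks the eight central voxels of a 4×4×4 grid as occupied. Testing every voxel center against every triangle costs O(voxels × triangles), so this sketch only suits small examples; the parity test also relies on the mesh being watertight.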

Data

In our paper, we created three new shape completion benchmarks based on ShapeNet [Chang et al., 2015], KITTI [Geiger et al., 2012] and ModelNet10 [Wu et al., 2015]. Here, we provide the data for the car shape completion benchmarks derived from ShapeNet and KITTI. For ModelNet10, we created an improved benchmark as part of an extension of our CVPR'18 paper; it will be made available later. The corresponding download links can be found in the repository or in the table below.

| Download | Description |
|---|---|
| SN-clean (∼ 5.3GB) | The "clean" version of our ShapeNet benchmark: the observations are generated synthetically without noise and can be used to benchmark shape completion methods. |
| SN-noisy (∼ 3.8GB) | The "noisy" version of our ShapeNet benchmark, where we synthetically added noise similar to that found in real data, for example on KITTI. |
| KITTI (∼ 2.5GB) | Our benchmark derived from KITTI; it uses the ground-truth 3D bounding boxes to extract observations from the LiDAR point clouds. It does not include ground-truth shapes; however, we attempted to construct an approximate alternative by considering the same bounding boxes across different timesteps. |
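Extracting observations from a LiDAR point cloud with a 3D bounding box amounts to transforming the points into the box frame and keeping those within the box extents. The Python sketch below shows this under an assumed, simplified convention (box center, size along the box axes, and yaw about the vertical z axis); KITTI's own labels use camera coordinates and a rotation about the camera's y axis, so a conversion step would be required, and the function name and `margin` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def points_in_box(points: Sequence[Point], center: Point,
                  size: Tuple[float, float, float], yaw: float,
                  margin: float = 0.0) -> List[Point]:
    """Return the points inside an oriented 3D box, in the box's local frame.

    Assumed convention (hypothetical, not KITTI's label format): `center` is
    the box center, `size` = (length, width, height) along the box's local
    x, y, z axes, and `yaw` rotates the box about the vertical z axis.
    `margin` optionally enlarges the box on every side.
    """
    cx, cy, cz = center
    half_l, half_w, half_h = (s / 2.0 + margin for s in size)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    local = []
    for x, y, z in points:
        dx, dy, dz = x - cx, y - cy, z - cz
        # Rotate by -yaw so that the box axes align with the coordinate axes.
        lx = cos_y * dx + sin_y * dy
        ly = -sin_y * dx + cos_y * dy
        if abs(lx) <= half_l and abs(ly) <= half_w and abs(dz) <= half_h:
            local.append((lx, ly, dz))
    return local
```

Returning the points in the box frame places every object in a canonical pose, which would be convenient if the extracted observations are subsequently voxelized on a fixed, object-centered grid.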

For details on the data formats and the data generation process, see the repository as well as the supplementary material of our paper.

Models

To make our experiments easy to reproduce, we also provide pre-trained models of the proposed approach (including shape priors) and the fully-supervised baseline:

| Download | Description |
|---|---|
| Models (∼ 224MB) | Pre-trained Torch models for the proposed amortized maximum likelihood (AML) approach (including the shape prior) and the fully-supervised baseline (Sup). |

- F. Engelmann, J. Stückler, and B. Leibe. Joint object pose estimation and shape reconstruction in urban street scenes using 3D shape priors. In Proc. of the German Conference on Pattern Recognition (GCPR), 2016.

- A. Geiger, P. Lenz, and R. Urtasun. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2012.

- A. X. Chang, T. A. Funkhouser, L. J. Guibas, P. Hanrahan, Q. Huang, Z. Li, S. Savarese, M. Savva, S. Song, H. Su, J. Xiao, L. Yi, and F. Yu. ShapeNet: An information-rich 3D model repository. arXiv.org, 1512.03012, 2015.

- Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang, and J. Xiao. 3D ShapeNets: A deep representation for volumetric shapes. In Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2015.

- G. Riegler, A. O. Ulusoy, H. Bischof, and A. Geiger. OctNetFusion: Learning depth fusion from data. CoRR, abs/1704.01047, 2017.