Originally inspired by the Lindau Nobel Laureate Meeting, the Klaus Tschira Stiftung started to organize a similarly unique meeting specifically for mathematicians and computer scientists. Instead of Nobel laureates, the event invites laureates who have received at least one of the following awards: the Abel Prize, the ACM Turing Award, the ACM Prize in Computing, the Fields Medal and the Nevanlinna Prize. In addition, roughly 200 young researchers, ranging from undergraduate (bachelor and master) students through PhD students to young postdocs and professors, are invited to participate.

Applications to the Heidelberg Laureate Forum usually close in February and require not only an up-to-date CV but also several recommendation letters and a statement of motivation. Bachelor and master students as well as PhD students and young postdocs can apply. Personally, I found that the organizers did a great job of selecting a diverse group of young researchers in terms of discipline (mathematics or computer science), gender, country and career path. As a result, the forum hosted researchers from numerous countries and from both academia and industry, ranging from students who had just finished high school to young professors. In addition to laureates and young researchers, guests from academia, for example from the ACM and the International Mathematical Union, as well as members of the press attended the forum.

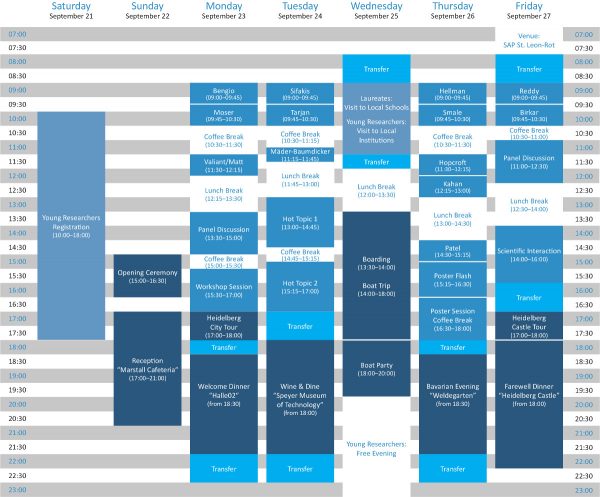

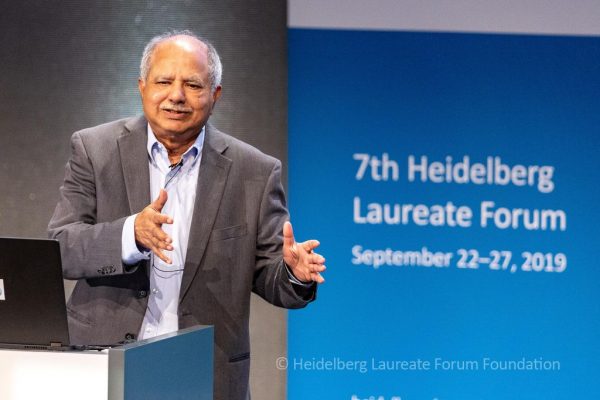

The structure of the forum, as shown above, is meant to foster exchange between young researchers and laureates. Apart from the Turing Lecture, given by Yoshua Bengio, and the Lindau Lecture, given by Edvard Moser, the laureates are free to choose the topics of their lectures. As a result, most laureates talked about their current research interests or gave general advice on academic careers. The panel discussions address topics of interest to the whole research community; among other topics, this year's panels discussed current problems in academic publishing and the gender gap in computer science and mathematics. Finally, many social events, such as the opening ceremony, several dinners, a boat tour on the Neckar and a visit to Heidelberg Castle, provided a unique setting for networking.

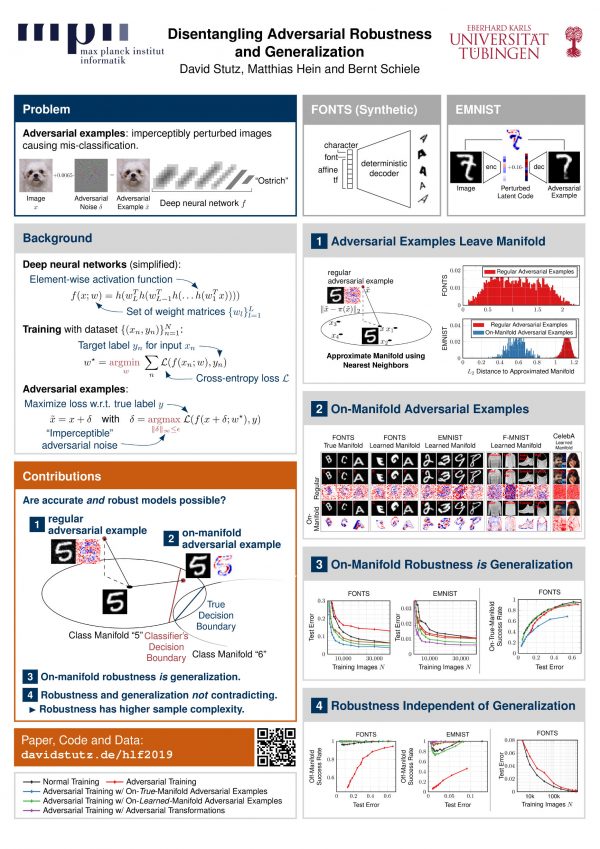

In this article, I want to share my general impressions of the event and discuss selected lectures related to artificial intelligence and deep learning. In addition, my poster and the corresponding talk on my work on adversarial examples [1] can be found below.

Impressions

More impressions can be found on the official Flickr account.

The Heidelberg Laureate Forum Foundation did an excellent job of organizing the event. As a participant, I only had to book my train; the hotel, all meals and transfers to and from dinners and event locations were provided. Moreover, the schedule worked out perfectly, including plenty of lectures, panels and events while also leaving enough time for networking and snacks. The lecture hall was very well equipped, showing both the slides and a video feed of the speaker. Questions could easily be asked using the provided microphones, which were used extensively during the panels on climate change, publishing and gender equality. All the social activities, such as the boat tour on the Neckar or the visit to Heidelberg Castle, were perfect opportunities to network. At the same time, every evening had a small agenda, for example a short presentation on climate change or ethics in AI. The event locations, such as the Technik Museum Speyer, were also chosen thoughtfully.

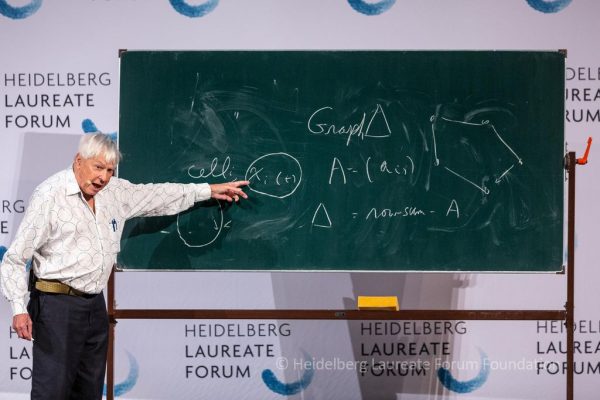

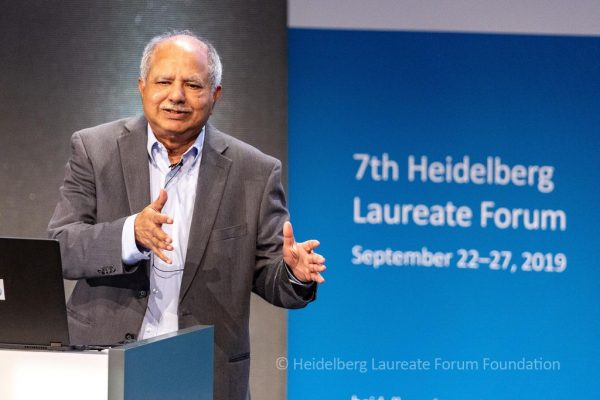

Figure 3: Impressions from the 7th Heidelberg Laureate Forum (2019), showing several laureates and social events. These images were taken from the official Flickr account.

Lectures

In the following, I focus on the lectures related to AI and deep learning given at this year's Heidelberg Laureate Forum.

All lectures are also available on YouTube.

Yoshua Bengio: Deep Learning for AI. In his Turing Lecture, Yoshua Bengio highlighted his work and ideas on deep learning. He motivated his interest in (deep) neural networks by the state of AI during his master's studies: back then, AI consisted mostly of rule-based, logical systems. Neural networks, in contrast, offered a way to actually learn, by composing many simple computations, i.e., neurons. He also emphasized the importance of distributed representations and compositionality. For example, it was only recently proven that, through compositionality, exponentially many functions can be represented without requiring exponentially many parameters. Throughout his talk, he touched on many recent developments in deep learning, such as generative adversarial networks and attention mechanisms. He also stressed that deep learning, while performing very well, does not yet exhibit human-level intelligence; as a simple counterexample, he spent several minutes discussing adversarial examples. He concluded his lecture with several interesting thoughts on working towards real intelligence. For example, he argued that it is still difficult to "learn" reasoning, explicitly distinguishing between system 1 (intuition) and system 2 (reasoning) tasks, as Daniel Kahneman does. Distributional shifts and out-of-distribution examples also remain problematic; both concern learning without the i.i.d. assumption. Overall, Bengio gave a brief but insightful overview of the history and current state of deep learning research, with many interesting thoughts and ideas.
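The compositionality point can be made tangible with a standard toy construction from the literature on depth in neural networks. It was not part of Bengio's talk, and the Python sketch below is only an illustration under my own assumptions, not the proof he referred to. A "tent" function on [0, 1] can be computed by one layer of two ReLU units; composing it with itself k times (a k-layer network with a constant number of parameters per layer) yields a function with 2^k linear pieces. All function names are hypothetical, and exact `Fraction` arithmetic is used so that counting pieces is not affected by floating-point noise.

```python
from fractions import Fraction


def relu(x: Fraction) -> Fraction:
    """Rectified linear unit: max(0, x)."""
    return max(Fraction(0), x)


def tent(x: Fraction) -> Fraction:
    """One 'layer' with two ReLU units computing the tent map on [0, 1]:
    2x on [0, 1/2] and 2 - 2x on [1/2, 1]."""
    return 2 * relu(x) - 4 * relu(x - Fraction(1, 2))


def deep_tent(x: Fraction, depth: int) -> Fraction:
    """Compose the tent map `depth` times, i.e. a network with `depth` layers."""
    for _ in range(depth):
        x = tent(x)
    return x


def count_linear_pieces(depth: int) -> int:
    """Count the linear pieces of deep_tent(., depth) on [0, 1].

    The breakpoints lie at multiples of 1 / 2**depth, so sampling on the
    finer grid with step 1 / 2**(depth + 1) places two grid intervals inside
    each piece. Every change of slope between adjacent intervals marks the
    start of a new piece.
    """
    n = 2 ** (depth + 1)
    xs = [Fraction(i, n) for i in range(n + 1)]
    ys = [deep_tent(x, depth) for x in xs]
    # Slope on each grid interval (the interval width is 1 / n).
    slopes = [(ys[i + 1] - ys[i]) * n for i in range(n)]
    changes = sum(1 for a, b in zip(slopes, slopes[1:]) if a != b)
    return changes + 1


if __name__ == "__main__":
    for depth in range(1, 6):
        # Piece counts printed: 2, 4, 8, 16, 32 (doubling with each layer).
        print(depth, count_linear_pieces(depth))
```

By contrast, a network with a single hidden layer of m ReLU units has at most m + 1 linear pieces on a line, since each unit contributes at most one breakpoint. Reproducing the 2^k pieces above with one hidden layer would therefore require at least 2^k - 1 units, whereas the deep version grows only linearly in k.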

Joseph Sifakis: Can We Trust Autonomous Systems? In his lecture, Joseph Sifakis addressed the topic of trustworthy autonomous systems in the broadest sense, using autonomous driving as a prominent example. Through several case studies, he argued that we need a new foundation for integrating machine learning into systems. The key question is how to assess whether a system powered by machine learning can be trusted. If this question cannot be answered, or the answer is insufficient, the system cannot replace a human, and a symbiotic autonomous system is more sensible. This is particularly true for many of today's systems, which Sifakis calls "best effort systems" because they come without any guarantees of trustworthiness. These considerations affect the whole life cycle of an autonomous system. For example, unlike what is often argued for autonomous cars, software updates for aircraft are not easily possible because these systems are usually certified. Overall, he gave an interesting lecture on what we as researchers and engineers should consider when building intelligent systems.

John Hopcroft: Research in Deep Learning. In his lecture, John Hopcroft stressed that, now that deep learning is achieving more and more impressive results, we need a theoretical framework for it. He then focused on adversarial examples in the context of the underlying data manifold, asking very fundamental questions: what does the "cat" manifold look like, and what is its dimension? In this context, Hopcroft also discussed some unintuitive and often misleading properties of high-dimensional spaces. If we could mathematically model the manifold within the high-dimensional input space, however, we could start proving "stuff" about how deep learning works. As an example, Hopcroft presented a very simple visual dataset whose underlying manifold is known and can be described mathematically. As an alternative starting point for theoretical work, he described how the loss surface during training can be simulated by aggregating simple, per-image loss functions. Overall, this lecture offered many interesting ideas that could serve as "theoretical starting points" for investigating deep learning.

Raj Reddy: Grand Challenges in AI. In the 1980s, Raj Reddy formulated a list of grand challenges that AI should tackle; see, for example, this article. In his lecture, he revisited these challenges, identified the solved ones, and formulated a set of new challenges. In general, a grand challenge (often motivated by prize money) is a hard-to-accomplish, goal-directed research effort whose success can be measured by a set of distinct metrics. History has seen many such challenges over the last few centuries. Of his original 10 grand challenges, formulated in 1988, only 3 have been solved. The new, updated challenges include, for example, language-to-language and speech-to-speech translation, for which he demands a maximum error rate of 5% and support for the 100 most-spoken languages on Earth. Other challenges include media summarization and self-reproducing machinery, problems that each comprise many sub-problems. Overall, he reminded us that such challenges are important for directing global research efforts and for motivating out-of-the-box thinking.

Panels

This year's panels focused on important topics within the research communities in computer science and mathematics:

Panel on Academic Publishing. This panel started with open access publishing but also covered many adjacent topics, such as the cost of publishing, reviewing and the "publish or perish" mentality. While every panelist agreed that open access publishing is the right thing to do, opinions diverged quickly when it came to the details. Personally, I found the details on how publishing works nowadays quite interesting. Currently, publishers mostly live off subscription models; by these numbers, every publication costs $3,800 on average, which seems far too much. On the other hand, while we as researchers readily accept travel and registration costs for conferences, many researchers do not want to pay for journal publications. Besides publishing costs, the panelists also discussed reviewing; specifically, many panelists believe that our publications have become too incremental. This results in more and more papers, which in turn require more and more reviewers. Reviewing, however, is not rewarded in an academic career, which makes it unattractive. Finally, the panelists discussed alternative metrics for academic hiring that go beyond citation counts and the number of publications. For example, published data and code could be taken into account; nowadays, however, nobody gets tenure for writing code. Overall, the panel revealed many problems in academia today without offering good solutions for all of them.

Panel on Gender Gap. Considering the low percentage of female researchers in mathematics and computer science, this panel discussed various ways to achieve more gender equality. Surprisingly, the discussion, including the questions from the participants, remained very constructive and did not lose focus. Personally, I found the remarks by Margo Seltzer most relevant. For academic hiring to be inclusive (regarding both gender and other underrepresented groups), the pool of candidates needs to be inclusive. For example, Canada requires these candidate pools to be inclusive, while the US does not; according to Seltzer, this alone already has a significant impact on who is hired as faculty. However, to obtain a diverse pool of candidates, she regularly has to ask multiple times: at first, male professors tend to recommend male researchers, thereby overlooking many excellent female researchers. Only when asked a second time do they recommend women and members of other underrepresented groups. Simply hiring the best candidate from such a pool then already improves the gender balance at universities. Through similar examples, the panel offered many constructive ideas for changing the academic culture in small steps.

Conclusion

Figure 4: The logo of the Heidelberg Laureate Forum taken from the official webpage.

To sum up, the Heidelberg Laureate Forum is a unique event that allows young researchers to meet esteemed laureates from computer science and mathematics. Through its structure, the forum fosters scientific as well as personal exchange without sacrificing the high scientific standard of the lectures and panels. Beyond topics in computer science and mathematics, the forum encourages participants to think about the big picture of science and academia and their role in society. Overall, I can only recommend applying to the forum; applications usually close in February and are open to young researchers from the undergraduate to the postdoctoral level.

- [1] David Stutz, Matthias Hein, Bernt Schiele. Disentangling Adversarial Robustness and Generalization. CVPR, 2019.